-

Notifications

You must be signed in to change notification settings - Fork 16.4k

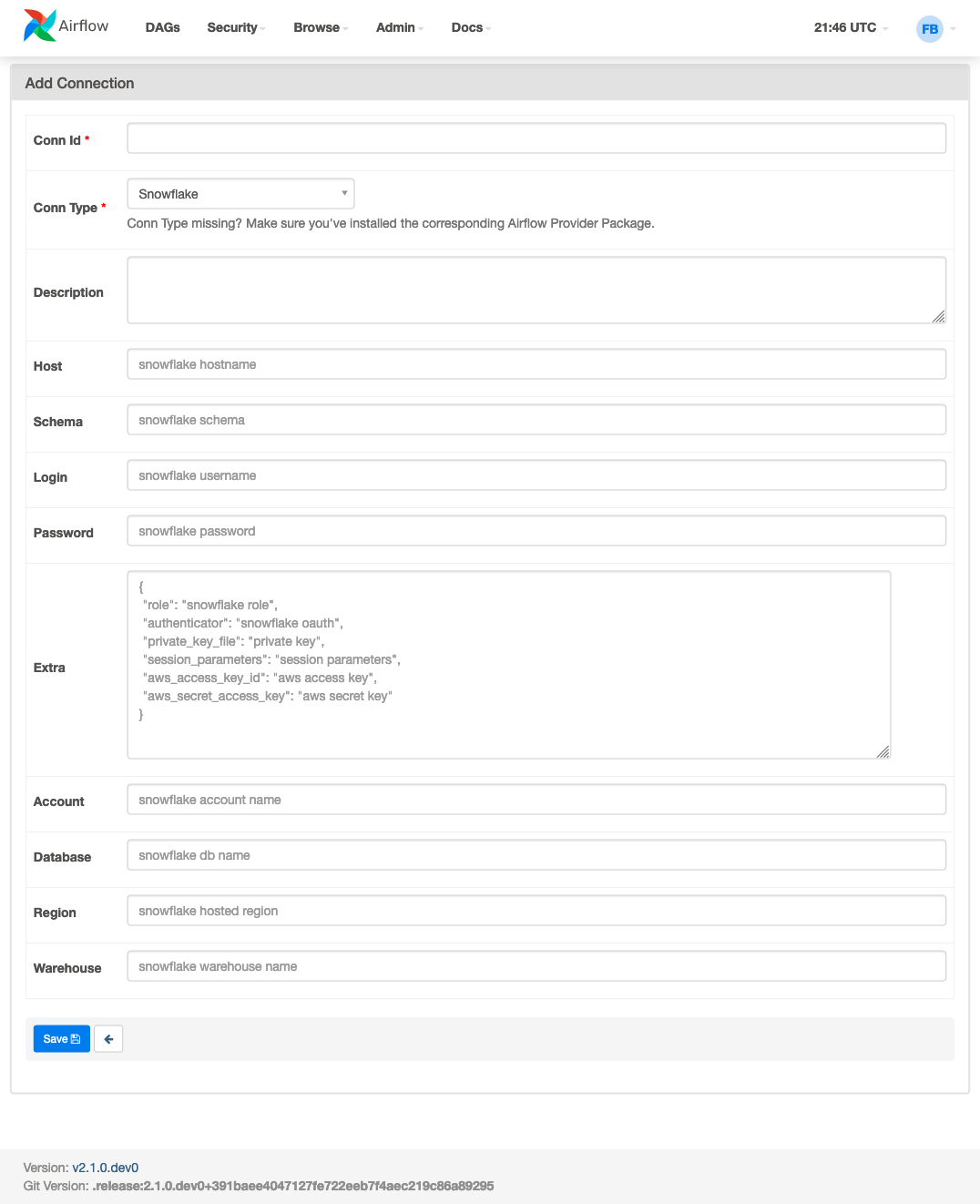

Add Dynamic fields to Snowflake Connection #14631

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add Dynamic fields to Snowflake Connection #14631

Conversation

Paradigma is a technology consultancy company located in Spain that are using airflow in various projects

`449b4072c2da_increase_size_of_connection_extra_field_.py` does not exist in 2.0.1

so we need to update down_migration

```

INFO [alembic.runtime.migration] Running upgrade 2c6edca13270 -> 61ec73d9401f, Add description field to connection

INFO [alembic.runtime.migration] Running upgrade 61ec73d9401f -> 64a7d6477aae, fix description field in connection to be text

INFO [alembic.runtime.migration] Running upgrade 64a7d6477aae -> e959f08ac86c, Change field in DagCode to MEDIUMTEXT for MySql

INFO [alembic.runtime.migration] Running upgrade e959f08ac86c -> 82b7c48c147f, Remove can_read permission on config resource for User and Viewer role

[2021-02-09 19:17:31,307] {migration.py:555} INFO - Running upgrade 82b7c48c147f -> 449b4072c2da, Increase size of connection.extra field to handle multiple RSA keys

```

When the image is prepared, PIP installation produces progress bars which are annoying - especially in the CI environment. This PR adds argument to control progress bar and sets it to "off" for CI builds.

When using the KubernetesPodOperator with log_events_on_failure=True, and the pod exits, the executor needs to access the events in the namespace. Before this resulted in an exception when using the chart as this it was not permitted by the pod-launcher role.

"airflow-tenant-primary" is a bit confusing, and the examples don't match up in the configuration file example. fix "connections_prefix" and "variables_prefix" mixup in first unordered list in "Managing Secrets" section make "variable_prefix" plural for consistency

When a one-time job is created with `CloudDataTransferServiceS3(GCS)ToGCSOperator`, the job remains on the GCP console even after the job is completed. This is a specification of the data transfer service, but I would like to add this parameter because there are normally cases where don't want to leave a one-time job.

…schedule (apache#14048) Clearing large number of tasks takes a long time. Most of the time is spent at this line in clear_task_instances (more than 95% time). This slowness sometimes causes the webserver to timeout because the web_server_worker_timeout is hit. ``` # Clear all reschedules related to the ti to clear session.query(TR).filter( TR.dag_id == ti.dag_id, TR.task_id == ti.task_id, TR.execution_date == ti.execution_date, TR.try_number == ti.try_number, ).delete() ``` This line was very slow because it's deleting TaskReschedule rows in a for loop one by one. This PR simply changes this code to delete TaskReschedule in a single sql query with a bunch of OR conditions. It's effectively doing the same, but now it's much faster. Some profiling showed great speed improvement (something like 40 to 50 times faster) compared to the first iteration. So the overall performance should now be 300 times faster than the original for loop deletion.

Two quick fixes to Dataproc operators and hooks. Add more templated fields to the DataprocClusterDeleteOperator as per apache#13454. There were a few other fields which could easily be templated so I added them as well. Don't use the global-dataproc.googleapis.com:443 URL when creating dataproc clients. This was partially done in apache#12907 but the other two client creation methods were not updated. Using the global-dataproc URL results in 404s when trying to create clusters in the global region. We don't need to specify the default endpoint as it is used by default in the dataproc client library: https://github.com/googleapis/python-dataproc/blob/6f27109faf03dd13f25294e57960f0d9e1a9fa27/google/cloud/dataproc_v1beta2/services/cluster_controller/client.py#L117

same as apache#13458 but for `82b7c48c147f_remove_can_read_permission_on_config_.py` migration This migration changes logging handlers so each next migration is differently formatted when doing airflow db reset. This commit fixes this behavior.

…4183) closes apache#13971 The value of `[webserver] cookie_samesite` was changed to `Lax` in >=2.0 from `''` (empty string) in 1.10.x. This causes the following error for users migrating from 1.10.x to 2.0 if the old airflow.cfg already exists. ``` Traceback (most recent call last): File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 2447, in wsgi_app response = self.full_dispatch_request() File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 1953, in full_dispatch_request return self.finalize_request(rv) File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 1970, in finalize_request response = self.process_response(response) File "/usr/local/lib/python3.9/site-packages/flask/app.py", line 2269, in process_response self.session_interface.save_session(self, ctx.session, response) File "/usr/local/lib/python3.9/site-packages/flask/sessions.py", line 379, in save_session response.set_cookie( File "/usr/local/lib/python3.9/site-packages/werkzeug/wrappers/base_response.py", line 468, in set_cookie dump_cookie( File "/usr/local/lib/python3.9/site-packages/werkzeug/http.py", line 1217, in dump_cookie raise ValueError("SameSite must be 'Strict', 'Lax', or 'None'.") ValueError: SameSite must be 'Strict', 'Lax', or 'None'.** ``` This commit takes care of it by using `Lax` when the value is empty string (``)

Catches Name Error on importing optional kubernetes package.

* Rework client-side script for connection form. Before this, fields on the connection form were dynamically changed by adding and removing the "hide" CSS class. If a provider were to add a field that requires validation (e.g. InputRequired), or if a different field type was used (e.g. NumberField), the entire form would be unsaveable, even if the currently selected connection type had nothing to do with that provider. This change takes a different approach; upon loading, the DOM elements for all extra fields are saved into a Map, then removed from the DOM tree. When another connection type is selected, these elements are restored into the DOM. * Use template string literals in connection form. Co-authored-by: Ryan Hamilton <ryan@ryanahamilton.com> Co-authored-by: Ryan Hamilton <ryan@ryanahamilton.com>

Co-authored-by: Jarek Potiuk <jarek@potiuk.com> Co-authored-by: Kamil Breguła <mik-laj@users.noreply.github.com>

…#14177) Co-authored-by: Arati Nagmal <arati@stepfunction.ai>

This extracts the "run this command" logic from the BashOperator in to a reusable hook. Co-authored-by: Daniel Standish <dstandish@users.noreply.github.com> Co-authored-by: Tomek Urbaszek <turbaszek@gmail.com> Co-authored-by: Ash Berlin-Taylor <ash_github@firemirror.com>

Rename GCSObjectsWtihPrefixExistenceSensor to GCSObjectsWithPrefixExistenceSensor

…or CI (apache#14196) Generic Sphinx errors that were unrelated to spelling were being raised as spelling errors leading to potential confusion. Reversing the order of the build (i.e. running the docs build before the spelling build) will catch such errors and more appropriately denote the issue as a build issue and not a spelling issue. Additionally, the error message for Sphinx errors unrelated to spelling has been updated to be more clear for such cases. closes: apache#14051

- Move "Guidelines to become an Airflow Committer" before "Guidelines for promoting Committers to Airflow PMC" - Remove "Becoming a Committer" section which is same as "Guidelines to become an Airflow Committer"

…ache#14026) Co-authored-by: uma6 <> Co-authored-by: Ephraim Anierobi <splendidzigy24@gmail.com>

Yes. I raised this because proposal 1 means we recognize that the aws secret should be saved in the extra field. |

Without this, Webserver fails with:

```

[2021-03-09 18:55:19,640] {base.py:122} INFO - POST http://aa.aa:9200/_count [status:200 request:0.142s]

[2021-03-09 18:55:19 +0000] [64] [ERROR] Error handling request

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/gunicorn/workers/sync.py", line 181, in handle_request

for item in respiter:

File "/usr/local/lib/python3.7/site-packages/werkzeug/wsgi.py", line 506, in __next__

return self._next()

File "/usr/local/lib/python3.7/site-packages/werkzeug/wrappers/base_response.py", line 45, in _iter_encoded

for item in iterable:

File "/usr/local/lib/python3.7/site-packages/airflow/utils/log/log_reader.py", line 84, in read_log_stream

logs, metadata = self.read_log_chunks(ti, current_try_number, metadata)

File "/usr/local/lib/python3.7/site-packages/airflow/utils/log/log_reader.py", line 58, in read_log_chunks

logs, metadatas = self.log_handler.read(ti, try_number, metadata=metadata)

File "/usr/local/lib/python3.7/site-packages/airflow/utils/log/file_task_handler.py", line 217, in read

log, metadata = self._read(task_instance, try_number_element, metadata)

File "/usr/local/lib/python3.7/site-packages/airflow/providers/elasticsearch/log/es_task_handler.py", line 186, in _read

and offset >= metadata['max_offset']

TypeError: '>=' not supported between instances of 'str' and 'int'

```

|

So do we think it makes sense to expose the AWS secret as a separate optional field on the form? A bit counter-intuitive, but I don't see any other way to design around it without building a feature that allows the user to encrypt extras. Edit: Unless we think it's worth investing in building a |

After recent snowflake provider upgrade (apache#14655), a typo was introduced in the urllib that prevented it to upgrade constraints automatically. This PR restores the correct name of the urllib library.

…he#14380) * Handle misformed env vars without crashing * Include traceback in celery_executor error log * Add test for airflow/configuration.py::as_dict

…ache#13209) * Upgrade KEDA to v2.0.0 and add support for worker persistence KEDA 2.0.0 introduces support for StatefulSets, so we can now remove the constraint that worker persistence must be disabled. Co-authored-by: Daniel Standish <dstandish@users.noreply.github.com>

Bumps [elliptic](https://github.com/indutny/elliptic) from 6.5.3 to 6.5.4. - [Release notes](https://github.com/indutny/elliptic/releases) - [Commits](indutny/elliptic@v6.5.3...v6.5.4) Signed-off-by: dependabot[bot] <support@github.com> Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

closes: apache#11929 This PR includes a new datetime branching operator: the current date and time, as given by datetime.datetime.now is compared against target datetime attributes, like year or hour, to decide which task id branch to take.

python/cpython#24297 change was included in Python 3.8.8 to fix a vulnerability (bpo-42967) Depending on which Base Python Image is run in our CI, two of the tests can fail or succeed. Our Previous two attempts: - apache@061cd23 - apache@49952e7 We might for a while get different base python version depending on the changes of a PR (whether or not it includes a change to dockerfiler). a) when you have PR which do not have changes in the Dockerfile, they will use the older python version as base (for example Python 3.8.7) b) when you have PR that touches the Dockerfile and have setup.py changes in master, it should pull Python 3.8.8 first.

…14710) Turns out apache#14698 did not fix the issue as Master failed again. After digging a bit more I found that the CVE was fixed in all Python versions: 3.6.13, 3.7.10 & 3.8.8 The solution in this PR/commit checks the `parse_qsl` behavior with following tests: ``` ❯ docker run -it python:3.8-slim bash root@41120dfd035e:/# python Python 3.8.8 (default, Feb 19 2021, 18:07:06) >>> from urllib.parse import parse_qsl >>> parse_qsl(";a=b") [(';a', 'b')] >>> ``` ``` ❯ docker run -it python:3.8.7-slim bash root@68e527725610:/# python Python 3.8.7 (default, Feb 9 2021, 08:21:15) >>> from urllib.parse import parse_qsl >>> parse_qsl(";a=b") [('a', 'b')] >>> ```

* Add initial codeowners for new UI directory * Add inititial "area:UI" labeling automation

Having it doesn't cause any harm, but it was already set five commands further up to the same value

…n. (apache#14718) Using this has two draw-backs for us. 1. MEMBER applies to _anyone in the org_, not just members/commiters to this repo 2. The value of this setting depends upon the user's "visiblity" in the org. I.e. if they hide their membership of the org, the author_association will show up as "CONTRIBUTOR" instead. Both of these combined mean we should instead use an alternative list. We can't use a secret as the `secrets.` context is not available in the runs-on stanza, so we have to have a hard-coded list in the workflow file :( This is as secure as the runner still checks the author against it's own list.

add dynamic fields to snowflake connection add dynamic fields to snowflake connection add dynamic fields to snowflake connection

# This is the 1st commit message: adds password field for aws secret # This is the commit message apache#2: adds password field for aws secret

|

I attempted to rebase my branch onto apache master and it added all commits that have been merged this week. |

Adds form fields and custom form behavior for the Snowflake connection so it is more obvious to new users what fields need to be filled out. I have divided the connection inputs into three main categories and created three proposals. The code in this PR implements proposal 1. Also the doc_strings for the hook are updated to reflect the params along with helpful information using sphinx directives.

Fields/inputs Used by the average user

Fields/inputs not used by user

Fields/inputs not used by user but reflected in code(not used by average user)

Proposal 1

screenshot:

Proposal 2

Proposal 3