Inside the RRDtool plugin

The RRDtool plugin is one of collectd's most complex plugins. The reason for this is that it has been tuned to work well in big setups, where updating RRD-files causes serious IO-problems. A detailed description of the problem can be found in the Tuning RRDTool for performance article in the RRDTool wiki.

As noted above, updating RRD-files is IO intensive, because only very little data is written with every update and the places that are accessed are not sequential. As long as all RRD-files (or at least the relevant parts of all RRD-files) fit into memory, the operating system's cache does a good job and usually IO is not a problem. If you have more files than that, you'll run into problems because HDDs aren't good at random access and, from what we hear, NAND-flash based SSDs aren't there yet, either.

All these tiny, non-sequential IO-operations are sometimes referred to as "IO-hell". Due to the way hard disks work, they have a very hard time with such an access pattern and their transfer rate will drop to maybe 2 MByte/s - if you have good hardware.

Of course, one could increase the interval in which data is collected, but nobody wants that...

Instead of increasing the interval in which data is collected, we increase the interval in which data is written to disk.

Updating one value in an RRD file writes 8 bytes of data to the file. (We'll neglect the data that's written to the head of the RRD file, because there's nothing we can do about that.) To change these 8 bytes in the file, one block (512 bytes for HDDs, usually more for NAND-flash SSDs) has to be read from disk, updated, and written to disk again. Assuming that the machine doesn't have enough memory to hold all the files, updating this file 60 times requires 60 read and write operations.

Updating 60 values at once writes 480 bytes to the file. For this, one or two blocks need to be read from disk and written back, resulting in a maximum of two read and write operations. And since the second block is right behind the first one, reading/writing it doesn't really count: it's a sequential access and therefore very fast.
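The arithmetic above can be checked with a small sketch. It assumes the 512-byte block size and 8 bytes per value from the text, and it assumes the values land at consecutive offsets in the file (no wrap-around in the round-robin archive). The function names and the `offset` parameter are made up for illustration.

```python
BLOCK_SIZE = 512  # bytes per block, the HDD figure used in the text
VALUE_SIZE = 8    # bytes written per value


def blocks_touched(offset, n_values, block_size=BLOCK_SIZE):
    """Count the distinct blocks covered by n_values consecutive values
    that start at byte `offset` in the file."""
    first = offset // block_size
    last = (offset + n_values * VALUE_SIZE - 1) // block_size
    return last - first + 1


def io_operations(n_values, batch, offset=0):
    """Count read-modify-write cycles when n_values are written in
    batches of `batch` values, one cycle per block touched per batch."""
    ops = 0
    for start in range(0, n_values, batch):
        count = min(batch, n_values - start)
        ops += blocks_touched(offset + start * VALUE_SIZE, count)
    return ops


one_by_one = io_operations(60, batch=1)                      # 60 separate updates
batched = io_operations(60, batch=60)                        # one update, block-aligned
batched_unaligned = io_operations(60, batch=60, offset=100)  # one update, straddles a block boundary
```

With these assumptions, writing the 60 values one at a time costs 60 cycles, while one batched update costs one cycle if the 480 bytes sit inside a single block and two if they straddle a block boundary.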

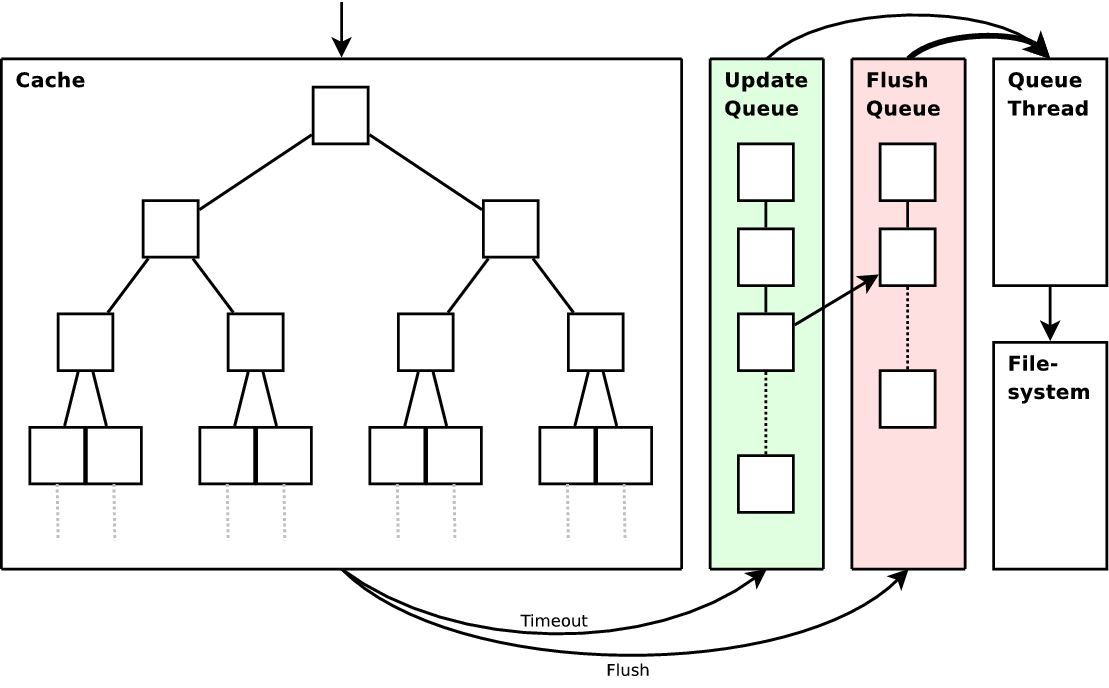

To cache the values in memory, the plugin uses a self-balancing binary search tree. Each node corresponds to an RRD file and holds the values that have not yet been written to the file. When a new value is added to a node, the plugin compares the timestamp on the oldest value with the timestamp on the value which is currently being inserted. If the difference (the "age" of the node) is too high, the node is put in the "update queue".

If a value is received for a node that's already in the update queue, the node in the queue will be updated, so that the pending write operation will include this new value as well. It's not implemented like this, but think of this as if a node is either in the cache or in the queue, but not in both at the same time.
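The cache logic described above might be sketched roughly as follows. This is not the plugin's actual code (the plugin is written in C): a Python `dict` stands in for the self-balancing search tree, and `CACHE_TIMEOUT`, `Node`, and `insert` are hypothetical names. It also follows the simplified mental model from the text, in which a node that is already queued simply keeps collecting values.

```python
from collections import deque

CACHE_TIMEOUT = 120.0  # hypothetical: maximum age in seconds before a node is queued


class Node:
    """Values for one RRD file that have not been written to disk yet."""

    def __init__(self, filename):
        self.filename = filename
        self.values = []     # list of (timestamp, value) tuples
        self.queued = False  # True while the node sits in the update queue


cache = {}              # filename -> Node; a dict stands in for the search tree
update_queue = deque()  # nodes waiting to be written by the queue thread


def insert(filename, timestamp, value):
    """Add a value to the node of `filename` and queue the node if it is too old."""
    node = cache.get(filename)
    if node is None:
        node = cache[filename] = Node(filename)
    node.values.append((timestamp, value))
    if node.queued:
        return  # the pending write will include the new value as well
    age = timestamp - node.values[0][0]  # newest timestamp minus oldest timestamp
    if age >= CACHE_TIMEOUT:
        node.queued = True
        update_queue.append(node)
```

Inserting values for the same file at 0, 60 and 120 seconds would queue the node on the third call; a fourth value at 130 seconds is appended to the already queued node without queuing it a second time.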

A separate “queue thread” dequeues nodes from the update queue and writes their values to the appropriate RRD file.

If values are enqueued to the update queue at a higher rate than the queue thread can dequeue them and write to RRD files, new values go into nodes already enqueued and multiple values will be combined in one update of the RRD file. So even if your hardware can't keep up with the amount of data you want to write to disk, collectd can and will act as a dynamically growing buffer between your values and the RRD files on disk.
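To see the buffering effect, here is a toy simulation. It is a deliberately simplified model, not the plugin's behaviour in detail: time advances in abstract "ticks", every file receives one value per tick, a file is queued as soon as it has a pending value (as if the timeout were zero), and the writer handles at most `writes_per_tick` files per tick. All names are invented for this sketch.

```python
from collections import deque


def simulate(n_files, ticks, writes_per_tick):
    """Return the number of values contained in each write, in write order."""
    pending = {f: 0 for f in range(n_files)}  # unwritten values per file
    queue = deque()                           # files waiting to be written
    queued = set()                            # files currently in the queue
    batches = []
    for _ in range(ticks):
        # Every file receives one new value per tick.
        for f in range(n_files):
            pending[f] += 1
            if f not in queued:
                queued.add(f)
                queue.append(f)
        # The writer handles a limited number of files per tick.
        for _ in range(min(writes_per_tick, len(queue))):
            f = queue.popleft()
            queued.discard(f)
            batches.append(pending[f])  # all pending values go out in one update
            pending[f] = 0
    return batches


fast = simulate(n_files=10, ticks=20, writes_per_tick=10)  # writer keeps up
slow = simulate(n_files=10, ticks=20, writes_per_tick=2)   # writer is five times too slow
```

In this model the fast writer always writes one value per update, while the slow writer settles at five values per update: the batch size grows until the number of writes matches what the "disk" can handle.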

As it is right now, your system can handle almost arbitrarily large volumes of data, but the queue thread will run constantly and, believe me, it's very, very good at what it's doing. Your system will be so busy writing to RRD files that you won't be able to use it for anything else. Generating graphs from the RRD files on such a system is no fun.

And even if the queue thread is not running constantly, for example because you have set the timeout to a high value, all values tend to reach the right “age” at the same time. Imagine that all updates were evenly distributed over the five minutes after which you write the values to disk. In the morning the backup will run and IO will be a lot slower. The update queue will grow and, when the backup is done, most values will reach the timeout age at the same time.

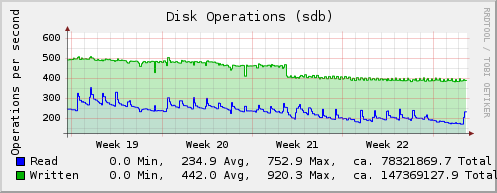

A solution to this problem is to throttle the speed at which RRD files are written. This isn't exactly what the RRDtool plugin does, because actually the rate at which nodes are dequeued from the update queue is limited, but it basically has the same effect. So if, for example, your hardware can handle 100 updates per second (this number is not unrealistically low!), throttle the plugin to 50 updates per second. It will take a little longer for all the values to be written on disk (assuming the timeout is set to a high value), but your system will remain usable.
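One way to picture the throttling is a queue that hands out at most a fixed number of nodes per second. The sketch below is an illustration under that assumption, not the plugin's implementation; `ThrottledQueue` and its parameters are hypothetical, and the clock and sleep functions are injectable only so the behaviour can be tried without actually waiting.

```python
import time
from collections import deque


class ThrottledQueue:
    """Queue whose get() returns at most `writes_per_second` items per second."""

    def __init__(self, writes_per_second, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / writes_per_second  # minimum spacing between dequeues
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()  # earliest time of the next dequeue
        self.items = deque()

    def put(self, item):
        self.items.append(item)

    def get(self):
        """Return the next item, waiting if the rate limit requires it.
        Returns None if the queue is empty."""
        if not self.items:
            return None
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval
        return self.items.popleft()
```

With `ThrottledQueue(50)`, draining 100 queued nodes takes roughly two seconds instead of one on hardware that could manage 100 updates per second, which leaves the other half of the IO capacity for everything else.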

If in the previous paragraph you thought "What, it will take even longer until I can see the data?!?", you've spotted the problem with the solution so far: What good is a very high resolution if it takes an hour for the data to actually show up in graphs? None at all, of course, and this is where the last concept comes into play: Flushing.

The idea behind "flushing" is that the number of values is much higher than the number of times someone actually looks at the graph generated from that data. Why should the daemon write to the file system every ten seconds (8640 times a day!), if the graph is only looked at twice a day? Wouldn't it make much more sense to write to the file system only when needed? This is what we mean by "flushing".

The RRDtool plugin can be told to write all values for one RRD file to disk right now (to "flush" the values). When a "FLUSH" request for a node is received, the node is put into the "flush queue". If the node was already in the update queue, it is removed from there and enqueued in the flush queue instead. The queue thread handles the flush queue with absolute priority, i.e. nodes are only dequeued from the update queue if the flush queue is empty. This is the reason why dequeuing from the update queue can be limited: All files that were flushed are written to disk at the highest possible speed, regardless of the "speed limit" imposed on the update queue.
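The interplay of the two queues can be sketched like this. Again, this is a simplified illustration with made-up names (`flush`, `next_node`), using file names in place of cache nodes; the rate limit on the update queue is left out to keep the priority rule visible.

```python
from collections import deque

update_queue = deque()  # files waiting for a regular (rate-limited) write
flush_queue = deque()   # files someone explicitly asked to be written now


def flush(filename):
    """Handle a FLUSH request: move the file to the flush queue."""
    if filename in flush_queue:
        return  # already scheduled for an immediate write
    try:
        update_queue.remove(filename)  # take it out of the update queue, if present
    except ValueError:
        pass  # it was not queued yet and only lived in the cache
    flush_queue.append(filename)


def next_node():
    """Pick what the queue thread writes next; the flush queue always wins."""
    if flush_queue:
        return flush_queue.popleft()
    if update_queue:
        return update_queue.popleft()
    return None
```

If "a", "b" and "c" are waiting in the update queue and flushes arrive for "c" and for a not yet queued "d", the queue thread writes "c" and "d" first and only then returns to "a" and "b".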

To send the "FLUSH" command to the RRDtool plugin, load the UnixSock plugin. Connect to the UNIX domain socket it opens and send the command as described in Plain-text-protocol and collectd-unixsock(5). The sample graphing script in contrib/, collection3, can automatically send the "FLUSH" command before drawing a graph. If you need a pointer on how to send the command with your own graphing solution, take a look at that script.
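For a graphing solution of your own, sending the command could look roughly like the sketch below. Treat it as an assumption-laden example rather than a reference: the helper names are invented, the socket path in the usage note depends on your UnixSock configuration, and the exact command syntax and reply format should be checked against collectd-unixsock(5). Identifiers containing spaces would need quoting, which this sketch does not handle.

```python
import socket


def build_flush_command(identifier=None, plugin="rrdtool", timeout=None):
    """Build one line of the plain-text protocol asking for a flush.
    The option names (timeout, plugin, identifier) follow our reading of
    collectd-unixsock(5); verify them against your collectd version."""
    parts = ["FLUSH"]
    if timeout is not None:
        parts.append(f"timeout={int(timeout)}")
    if plugin:
        parts.append(f"plugin={plugin}")
    if identifier:
        parts.append(f"identifier={identifier}")
    return " ".join(parts) + "\n"


def send_flush(socket_path, identifier, timeout=None):
    """Send a FLUSH for one identifier and return the first reply line."""
    command = build_flush_command(identifier, timeout=timeout)
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(command.encode("ascii"))
        reply = sock.makefile("r").readline()  # status line sent by the daemon
    return reply.strip()
```

A graphing script might call something like `send_flush("/var/run/collectd-unixsock", "localhost/cpu-0/cpu-user")` right before invoking `rrdtool graph`; both the path and the identifier here are placeholders for your own setup.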

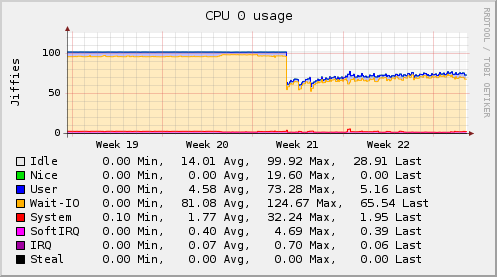

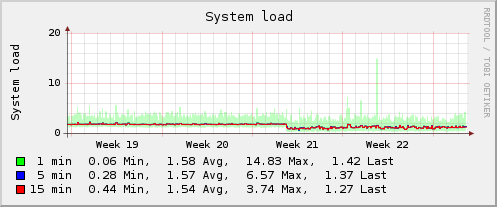

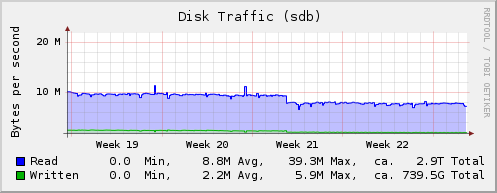

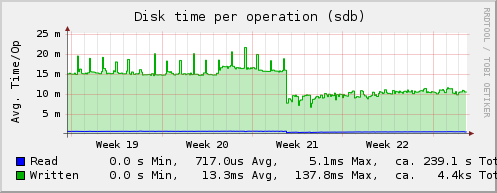

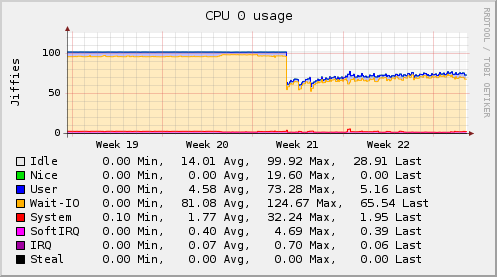

These graphs are from a system that has been upgraded from collectd 4.4 to collectd 4.6. With the new version, the WritesPerSecond configuration option of the RRDtool plugin has been activated and set to 50 write operations per second. Overall system performance improved considerably, especially since the system now has some spare time to create graphs and do similar work.