Introduction

The MOSIM Framework is an open modular framework for efficient and interactive simulation and analysis of realistic human motions for professional applications. It provides a simulation environment to combine task descriptions with behavior modeling and motion simulation in a modular and extendible fashion.

In classical approaches, motions are simulated directly in the target engine. For example, in a manufacturing simulation of a car inside the Unity game engine, all components would be implemented directly in the engine environment. Components are thus not necessarily exchangeable with other simulation engines: motions generated in RAMSIS or IPS IMMA are not directly applicable in Unity and vice versa. The MOSIM Framework focuses on human simulation only. All other components of a simulation (e.g. robots, production lines) are not simulated inside the MOSIM Framework; they have to be simulated by another component (e.g. the FMI Framework) and are coupled indirectly in the target engine.

The MOSIM Framework aims to separate motion simulation, task description, and behavior modeling in an engine-agnostic simulation framework, allowing for the modular combination of different simulation frameworks and the exchange of simulation models. The framework describes several components, among others:

- The Target Engine
  - maintains the 3D scene (scene graph)
  - contains the Target Avatar, which is simulated with the framework
  - controls and integrates the simulation of other components (e.g. robots, production line, FMI simulations, etc.) which are not simulated by the MOSIM Framework
  - provides a central clock
- Motion Model Units (MMUs) simulate human motions
  - for a given goal (e.g. where to reach to, where to walk to, where to point at)
  - based on the current state of the simulation
  - with different techniques (e.g. kinematic models, physical simulations, neural networks)
  - and provide the result in discrete time steps (e.g. a new pose based on a simulation duration of 0.03 seconds)
- A Co-Simulation is used to
  - schedule the execution of MMUs
  - combine the results of MMUs executed in parallel
  - manage constraints of MMUs
- A Behavior Model is used to
  - incorporate higher-level task descriptions
  - provide information on the parameterization of MMUs to the co-simulator
  - create a logical order of MMUs to solve a higher-level task

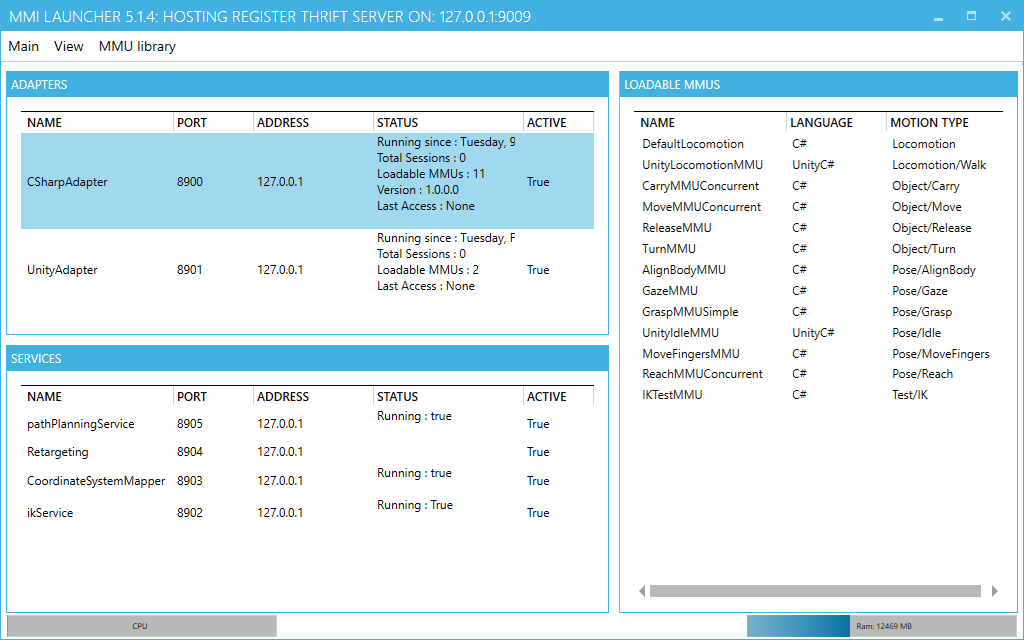

- The Central Registry (Launcher)
  - gathers all running services, adapters and MMUs
  - can be used to exchange information (e.g. ports and IP addresses)
- Adapters are used to
  - connect to the central registry (launcher)
  - load MMUs from the file system
  - provide assistance for connecting MMUs and services

As these frameworks are implemented in various programming languages, remote procedure calls with the Apache Thrift software are used to communicate between components.

A complete description of the technical architecture and its components can be found on the next page.

The MOSIM Framework operates with distributed components that communicate via remote function calls over TCP/IP using the Apache Thrift protocol. For this to work, all services and MMUs must be started first. To assist with this procedure, we recommend using the MMI Launcher on a Windows system. When the launcher is started, all other components are started in separate windows, and all running components are visible inside the launcher.
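The role of the launcher as a central registry can be illustrated with a minimal sketch. It assumes a plain in-memory dictionary; `ComponentDescription`, `RegistrySketch`, the component names and the ports are all hypothetical and are not part of the MOSIM API. In the framework itself, the registry is reached via Thrift, which this sketch omits.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical description of a running component; not the MOSIM data type.
public sealed class ComponentDescription
{
    public string Name { get; }
    public string Address { get; }
    public int Port { get; }

    public ComponentDescription(string name, string address, int port)
    {
        Name = name;
        Address = address;
        Port = port;
    }
}

// Hypothetical in-memory registry that maps component names to endpoints.
public sealed class RegistrySketch
{
    private readonly Dictionary<string, ComponentDescription> components =
        new Dictionary<string, ComponentDescription>();

    // Called by a component after it has started.
    public void Register(ComponentDescription description) =>
        components[description.Name] = description;

    // Called by a client (e.g. the target engine) to find a component.
    public bool TryResolve(string name, out ComponentDescription description) =>
        components.TryGetValue(name, out description);
}

public static class RegistryDemo
{
    public static void Main()
    {
        var registry = new RegistrySketch();
        // Names, addresses and ports are made up for illustration.
        registry.Register(new ComponentDescription("CoSimulation", "127.0.0.1", 9011));
        registry.Register(new ComponentDescription("CSharpAdapter", "127.0.0.1", 8900));

        if (registry.TryResolve("CSharpAdapter", out var adapter))
            Console.WriteLine($"{adapter.Name} -> {adapter.Address}:{adapter.Port}");
    }
}
```

The point of the sketch is only the lookup pattern: components announce where they can be reached, and clients ask the registry instead of hard-coding ports and IP addresses.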

In order to use and execute motions, a target engine project is required. The target engine is the central simulation component, which hosts the geometric scene and calls the individual components during runtime. We provide target engine integrations for Unity and Unreal Engine. As a start, we recommend using the example scenes provided in the Unity Demos Repository on GitHub (e.g. `Assets / MMI / Scenes / SingleMMUs / SingleMMU_ManualDemo.unity`). The Unity scene must be annotated as described in the documentation so that information can be transferred to all other components. When the Unity game is executed, it connects to the launcher and loads all components. Via the TCP/IP connection and Apache Thrift, the scene information is shared with all adapters, and the "Initialize" function of all MMUs is called remotely with the specifics of the avatar to be simulated.

To execute MMUs and generate motions, an instruction must be assigned to the co-simulator, which in turn assigns instructions to the individual MMUs. Instructions are of type MInstruction and contain information about the motion type to be used (and thus which MMU to utilize), as well as any constraints and properties required by the MMU to be executed. In the demos repository there are multiple examples of instructions, e.g. in the TestSingleMMU.cs file for the SingleMMUs scene:

```csharp
{
    MInstruction walkInstruction = new MInstruction(MInstructionFactory.GenerateID(), "Walk", MOTION_WALK)
    {
        Properties = PropertiesCreator.Create("TargetID", UnitySceneAccess.Instance.GetSceneObjectByName("WalkTarget").ID)
    };

    MInstruction idleInstruction = new MInstruction(MInstructionFactory.GenerateID(), "Idle", MOTION_IDLE)
    {
        // Start idle after walk has finished.
        // Synchronization constraint similar to BML "id:End" (BML original: <bml start="id:End"/>)
        StartCondition = walkInstruction.ID + ":" + mmiConstants.MSimulationEvent_End
    };

    this.CoSimulator.Abort();

    // Use the avatar's current posture as both the initial and the current state
    MSimulationState currentState = new MSimulationState() { Initial = this.avatar.GetPosture(), Current = this.avatar.GetPosture() };

    // Assign walk and idle instruction
    this.CoSimulator.AssignInstruction(walkInstruction, currentState);
    this.CoSimulator.AssignInstruction(idleInstruction, currentState);
}
```

These instructions, for example, make the character walk to the WalkTarget position and start the Idle MMU once the target is reached.
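How a start condition of the form `<instructionID>:<eventType>` might be evaluated can be sketched as follows. This is only an illustration under stated assumptions: `StartConditionSketch`, its methods and the set of observed events are hypothetical, and the string `"end"` merely stands in for `mmiConstants.MSimulationEvent_End`. The co-simulator's actual logic is not shown in this text.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the MOSIM API: it only illustrates how a
// start condition of the form "<instructionID>:<eventType>" could be checked.
public static class StartConditionSketch
{
    // Splits e.g. "walk-1:end" into the referenced instruction ID and event type.
    public static (string InstructionId, string EventType) Parse(string condition)
    {
        int separator = condition.LastIndexOf(':');
        if (separator <= 0 || separator == condition.Length - 1)
            throw new FormatException("Expected '<instructionID>:<eventType>'.");
        return (condition.Substring(0, separator), condition.Substring(separator + 1));
    }

    // True once the referenced event has been observed for that instruction.
    public static bool IsSatisfied(string condition, ISet<(string, string)> observedEvents)
    {
        var (id, eventType) = Parse(condition);
        return observedEvents.Contains((id, eventType));
    }

    public static void Main()
    {
        var observed = new HashSet<(string, string)>();
        // "end" is an assumed stand-in for mmiConstants.MSimulationEvent_End.
        string condition = "walk-1" + ":" + "end";

        Console.WriteLine(IsSatisfied(condition, observed)); // walk has not ended yet
        observed.Add(("walk-1", "end"));
        Console.WriteLine(IsSatisfied(condition, observed)); // walk has ended
    }
}
```

Under this reading, the idle instruction stays pending until an end event for the walk instruction has been recorded, which matches the BML-style `id:End` synchronization mentioned in the code comment.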

The Co-Simulator evaluates whether the prerequisites of the required MMUs are fulfilled and calls the AssignInstruction function of the MMU remotely via Thrift. The MMU therefore executes in its own process (e.g. in the CsAdapter or UnityAdapter program started with the launcher). If errors are thrown inside the target engine, we recommend checking the respective adapter for more information.

When an MMU has an instruction assigned and is running, the Co-Simulator calls its DoStep method every frame, and the MMU should produce the next pose of the animation. The target engine queries the Co-Simulator, which in turn manages all MMUs and merges their results into a single posture. As everything operates via remote function calls, the actual code that simulates the motion does not run within the target engine.

The result is sent back to the target engine and displayed there. Once an MMU has finished its execution and reached the assigned state, it should send an end event to indicate completion. Afterwards, the Co-Simulation does not call it again until the next instruction is assigned.
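The per-frame cycle described above (step each running MMU, combine the results, stop calling an MMU after its end event) can be sketched in a few lines. All types here are hypothetical stand-ins, not the Thrift-generated MOSIM interfaces: a posture is reduced to an array of numbers, the end event to a `Finished` flag, and merging is simplified to chaining each MMU's output into the next one. Everything runs in one process, whereas the framework uses remote calls.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the result of one simulation step.
public sealed class StepResult
{
    public double[] Posture = Array.Empty<double>(); // simplified pose values
    public bool Finished;                             // stands in for an end event
}

// Hypothetical stand-in for an MMU.
public interface IMotionUnit
{
    StepResult DoStep(double deltaTime, double[] currentPosture);
}

// Toy MMU: moves every posture value towards a target at a fixed speed.
public sealed class MoveTowards : IMotionUnit
{
    private readonly double target;
    private readonly double speed;

    public MoveTowards(double target, double speed)
    {
        this.target = target;
        this.speed = speed;
    }

    private double Step(double value, double maxDelta) =>
        Math.Abs(target - value) <= maxDelta
            ? target
            : value + Math.Sign(target - value) * maxDelta;

    public StepResult DoStep(double deltaTime, double[] currentPosture)
    {
        double maxDelta = speed * deltaTime;
        double[] next = currentPosture.Select(v => Step(v, maxDelta)).ToArray();
        return new StepResult { Posture = next, Finished = next.All(v => v == target) };
    }
}

// Simplified co-simulator: chains MMU results instead of truly merging them.
public sealed class CoSimulatorSketch
{
    private readonly List<IMotionUnit> active = new List<IMotionUnit>();

    public int ActiveCount => active.Count;

    public void Assign(IMotionUnit mmu) => active.Add(mmu);

    // One frame: step every active MMU and drop those that reported completion.
    public double[] DoStep(double deltaTime, double[] posture)
    {
        foreach (var mmu in active.ToList())
        {
            StepResult result = mmu.DoStep(deltaTime, posture);
            posture = result.Posture;
            if (result.Finished) active.Remove(mmu);
        }
        return posture;
    }
}

public static class CoSimulationDemo
{
    public static void Main()
    {
        var coSimulator = new CoSimulatorSketch();
        coSimulator.Assign(new MoveTowards(target: 1.0, speed: 10.0));

        double[] posture = { 0.0, 0.5 };
        int frames = 0;
        while (coSimulator.ActiveCount > 0)
        {
            posture = coSimulator.DoStep(0.03, posture); // 0.03 s per frame
            frames++;
        }
        Console.WriteLine($"finished after {frames} frames: [{string.Join(", ", posture)}]");
    }
}
```

The loop in `Main` plays the role of the target engine: it asks the co-simulator for the next posture each frame and stops once no MMU is active anymore.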
