
[ONNX] Add binary_cross_entropy_with_logits op to ONNX opset version 12 #49675


Commits on Dec 17, 2020

Commit 170908b

Commit 203e181

Commits on Dec 22, 2020

Commit 29dd23f

Commit f7c63eb

Commits on Dec 23, 2020


[te] Fix bugs with shift operators (pytorch#49396)

Summary: Pull Request resolved: pytorch#49396 Pull Request resolved: pytorch#49271

Two things:
1. These throw exceptions in their constructor, which causes a segfault (*), so move the exceptions to ::make.
2. They technically support FP types, but the rules are complicated, so let's not bother.

(*) The reason for the segfault: all Exprs, including these, inherit from KernelScopedObject, whose constructor adds the object to a list for destruction at the end of the containing KernelArena's lifetime. But if the derived-class constructor throws, the object is deleted even though it's still in the KernelArena's list. So when the KernelArena is itself deleted, it double-frees the pointer and dies.

I've also fixed And, Or, and Xor in this diff.

ghstack-source-id: 118594998 Test Plan: `buck test //caffe2/test:jit` Reviewed By: bwasti Differential Revision: D25512052 fbshipit-source-id: 42670b3be0cc1600dc5cda6811f7f270a2c88bba
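The ordering problem behind the segfault can be sketched in Python. This is only an analogy under stated assumptions: `Arena`, `ShiftExpr`, and the `"int"`/`"float"` dtype strings are hypothetical stand-ins, and Python's garbage collector cannot reproduce the actual double free. The sketch shows the idea behind the fix. Because registration happens in the constructor, validation moves into a `make` factory that runs before construction.

```python
class Arena:
    """Hypothetical stand-in for KernelArena: owns every object registered with it."""

    def __init__(self):
        self.objects = []


class ShiftExpr:
    """Hypothetical stand-in for a shift-operator Expr node."""

    def __init__(self, arena, lhs_dtype, rhs_dtype):
        # Mirrors KernelScopedObject: the object registers itself on construction,
        # so raising after this point would leave a half-built object in the arena.
        arena.objects.append(self)
        self.lhs_dtype = lhs_dtype
        self.rhs_dtype = rhs_dtype

    @classmethod
    def make(cls, arena, lhs_dtype, rhs_dtype):
        # Validate *before* constructing, so a rejected node is never registered.
        if lhs_dtype != "int" or rhs_dtype != "int":
            raise TypeError("shift operators only support integral types")
        return cls(arena, lhs_dtype, rhs_dtype)
```

After a rejected `make` call, the arena holds nothing, so there is nothing for a later cleanup pass to free twice.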

Commit 086fcf6

[static runtime] refine fusion group (pytorch#49340)

Summary: Pull Request resolved: pytorch#49340 This refines the fusion group to include only certain types of operations. We cannot safely handle "canRunNatively" types, and the memonger pass causes regressions on some internal models, so it was disabled (to be revisited with proper memory optimization once Tensor pools are implemented). Test Plan:
```
buck test mode/no-gpu caffe2/test:static_runtime
buck test //caffe2/benchmarks/static_runtime:static_runtime_cpptest
```
Reviewed By: ZolotukhinM Differential Revision: D25520105 fbshipit-source-id: add61d103e4f8b4615f5402e760893ef759a60a9

Commit 43aa3be

[JIT] Support multiple outputs in subgraph matcher. (pytorch#48992)

Summary: Pull Request resolved: pytorch#48992 Differential Revision: D25388100 Test Plan: Imported from OSS Reviewed By: heitorschueroff Pulled By: ZolotukhinM fbshipit-source-id: d95713af2220cf4f99ac92f59f8e5b902f2f3822

Mikhail Zolotukhin authored and hwangdeyu committed Dec 23, 2020

Commit 837ac43

[numpy] torch.{all/any} : output dtype is always bool (pytorch#47878)

Summary: BC-breaking note: This PR changes the behavior of the any and all functions to always return a bool tensor. Previously these functions were only defined on bool and uint8 tensors, and when called on uint8 tensors they would also return a uint8 tensor. (When called on a bool tensor they would return a bool tensor.)

PR summary: pytorch#44790 (comment)

Fixes 2 and 3. Also fixes pytorch#48352.

Changes
* Output dtype is always `bool` (consistent with numpy). **BC-breaking (previously the output dtype matched the input dtype)**
* Uses vectorized version for all dtypes on CPU
* Enables test for complex
* Update doc for `torch.all` and `torch.any`

TODO
* [x] Update docs
* [x] Benchmark
* [x] Raise issue on XLA

Pull Request resolved: pytorch#47878 Reviewed By: H-Huang Differential Revision: D25421263 Pulled By: mruberry fbshipit-source-id: c6c681ef94004d2bcc787be61a72aa059b333e69

Commit 4bdc202

Replace THError() check in THCTensorMathReduce.cu with C10_CUDA_KERNEL_LAUNCH_CHECK() (pytorch#49424)

Summary: Pull Request resolved: pytorch#49424 As per conversation in this [comment](https://www.internalfb.com/intern/diff/D25541113/?dest_fbid=393026838623691&transaction_id=3818008671564312) on D25541113 (pytorch@e2510a0), although THError does more than just log any errors associated with CUDA kernel launches, we're going to go ahead and replace it with C10_CUDA_KERNEL_LAUNCH_CHECK, so as to be consistent throughout the code base. Standardization FTW. This commit is purposefully sent in as a single-file change so it can be easily reverted if it introduces a regression. Test Plan: Checked that the code still builds with
```
buck build //caffe2/aten:ATen-cu
```
Also ran basic aten tests
```
buck test //caffe2/aten:atest
```
Reviewed By: r-barnes Differential Revision: D25567863 fbshipit-source-id: 1093bfe2b6ca6b9a3bfb79dcdc5d713f6025eb77

Amogh Akshintala authored and hwangdeyu committed Dec 23, 2020

Commit 6428593

Fix include files for out-of-tree compilation (pytorch#48827)

Summary: Signed-off-by: caozhong <zhong.z.cao@intel.com> Pull Request resolved: pytorch#48827 Reviewed By: agolynski Differential Revision: D25375988 Pulled By: ailzhang fbshipit-source-id: a8d5ab4572d991d6d96dfe758011517651ff0a6b

Commit 3e6bdd1

Add flag torch_jit_disable_warning_prints to allow disabling all warnings.warn (pytorch#49313)

Summary: Adding a flag torch_jit_disable_warning_prints to optimize interpreter performance by suppressing a (potentially large) number of warnings.warn calls. This works around a mismatch between TorchScript's warning behavior and Python's: Python by default triggers a warning once per location, but TorchScript doesn't support that. As a result, the same warning triggers and prints once per inference run, hurting performance. Pull Request resolved: pytorch#49313 Reviewed By: SplitInfinity Differential Revision: D25534274 Pulled By: gmagogsfm fbshipit-source-id: eaeb57a335c3e6c7eb259671645db05d781e80a2
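Python's per-location warning deduplication, the behavior TorchScript lacks, can be observed with the standard `warnings` module. This is a minimal sketch. `emit` and `count_warnings` are hypothetical helpers and are not part of PyTorch:

```python
import warnings


def emit():
    # Always issued from this same source line, i.e. the same "location".
    warnings.warn("slow path taken", UserWarning)


def count_warnings(action, runs):
    """Call emit() `runs` times under the given filter action; return how many warnings were recorded."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter(action)
        for _ in range(runs):
            emit()
    return len(caught)
```

Under the `"default"` action, the warning is recorded once for that source location. Under `"always"`, it is recorded on every call, which roughly mirrors the once-per-run behavior the PR describes for TorchScript.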

Commit 9058e5f

[DPER] Introduce barrier operation to force synchronization of threads in async execution (pytorch#49322)

Summary: Pull Request resolved: pytorch#49322 In some cases, async execution might lose dependencies (alias-like ops) or produce suboptimal scheduling when there is a choice of which parts to schedule first. An example of the latter can happen in ModelParallel training, where a copy can get lower priority than the rest of the execution on the given GPU, which causes other GPUs to starve. This operator addresses these issues by introducing extra explicit dependencies between ops. Test Plan: Unit test / E2E testing in future diffs. Reviewed By: xianjiec Differential Revision: D24933471 fbshipit-source-id: 1668994c7856d73926cde022378a99e1e8db3567
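The ordering guarantee a barrier provides can be sketched with Python's `threading.Barrier`. This is a thread-level analogy, not the caffe2/DPER operator, and `run_with_barrier` is a hypothetical helper. No worker records an `"after"` event until every worker has recorded its `"before"` event:

```python
import threading


def run_with_barrier(num_workers):
    """Run workers that log "before", wait at a shared barrier, then log "after"."""
    barrier = threading.Barrier(num_workers)
    lock = threading.Lock()
    log = []

    def worker(i):
        with lock:
            log.append(("before", i))
        barrier.wait()  # nobody proceeds until all workers have arrived
        with lock:
            log.append(("after", i))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

The order within each phase is still up to the scheduler. Only the phase boundary is enforced, which is the kind of explicit dependency the operator adds.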

Commit f360b23

[FX] Rename Node._uses and refactor Node.all_input_nodes (pytorch#49415)

Summary: Pull Request resolved: pytorch#49415 Test Plan: Imported from OSS Reviewed By: zdevito Differential Revision: D25565341 Pulled By: jamesr66a fbshipit-source-id: 2290ab62572632788809ba16319578bf0c0260ee

James Reed authored and hwangdeyu committed Dec 23, 2020

Commit 4c667a1

[PyTorch] Use plain old function pointer for RecordFunctionCallback (reapply) (pytorch#49408)

Summary: Pull Request resolved: pytorch#49408 Nearly every non-test callsite doesn't need to capture any variables anyway, and this saves 48 bytes per callback. ghstack-source-id: 118665808 Test Plan: Wait for GitHub CI, since we had C++14-specific issues with this one in previous PR pytorch#48629 Reviewed By: malfet Differential Revision: D25563207 fbshipit-source-id: 6a2831205917d465f8248ca37429ba2428d5626d

Commit 1c9a0bf

[CMake] Use libtorch_cuda list defined in bzl file (pytorch#49429)

Summary: Since NCCL is an optional CUDA dependency, remove nccl.cpp from the core filelist Pull Request resolved: pytorch#49429 Reviewed By: nikithamalgifb Differential Revision: D25569883 Pulled By: malfet fbshipit-source-id: 61371a4c6b0438e4e0a7f094975b9a9f9ffa4032

Commit 4558c13

update breathe (pytorch#49407)

Summary: Fixes pytorch#47462, but not completely. Update breathe to the latest version to get fixes for the "Unable to resolve..." issues. There are still some build errors, but much fewer than before. Pull Request resolved: pytorch#49407 Reviewed By: izdeby Differential Revision: D25562163 Pulled By: glaringlee fbshipit-source-id: 91bfd9e9ac70723816309f489022d72853f5fdc5

Commit 6275612

[StaticRuntime] Permute_out (pytorch#49447)

Summary: Pull Request resolved: pytorch#49447 Adding an out variant for `permute`. It's better than fixing the copy inside contiguous because 1) we can leverage the c2 math library, and 2) contiguous creates a tensor inside the function which isn't managed by the MemoryPlanner in StaticRuntime. Test Plan: Benchmark:
```
After:
I1214 12:35:32.218775 991920 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.0902339. Iters per second: 11082.3
Before:
I1214 12:35:43.368770 992620 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.0961521. Iters per second: 10400.2
```
Reviewed By: yinghai Differential Revision: D25541666 fbshipit-source-id: 013ed0d4080cd01de4d3e1b031ab51e5032e6651
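An out variant writes into a buffer the caller already owns instead of allocating its own result. That is what lets a memory planner manage the buffer. This is a minimal sketch for the 2-D case on flat row-major lists. `permute_2d_out` is a hypothetical name, and this is not the ATen kernel:

```python
def permute_2d_out(src, rows, cols, out):
    """Write the transpose of a row-major rows x cols matrix into `out`.

    `out` is a caller-owned, preallocated flat list of length rows * cols,
    standing in for a buffer managed by a memory planner.
    """
    if len(src) != rows * cols or len(out) != rows * cols:
        raise ValueError("size mismatch")
    for r in range(rows):
        for c in range(cols):
            # Element (r, c) of src lands at (c, r) of the cols x rows result.
            out[c * rows + r] = src[r * cols + c]
    return out
```

Returning `out` itself, rather than a fresh list, means repeated calls can reuse one buffer across iterations.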

Hao Lu authored and hwangdeyu committed Dec 23, 2020

Commit 7439f10

fix optimizer.pyi typo 'statue'->'state' (pytorch#49388)

Summary: Pull Request resolved: pytorch#49388 Test Plan: Imported from OSS Reviewed By: zou3519 Differential Revision: D25553672 Pulled By: glaringlee fbshipit-source-id: e9f2233bd678a90768844af2d8d5e2994d59e304

lixinyu authored and hwangdeyu committed Dec 23, 2020

Commit edea937

[StaticRuntime] Fusion pass for ClipRanges/GatherRanges/LengthsToOffsets (pytorch#49113)

Summary: Pull Request resolved: pytorch#49113 Reviewed By: ajyu Differential Revision: D25388512 fbshipit-source-id: 3daa5b9387a3a10b6c220688df06540c4d844aea

Hao Lu authored and hwangdeyu committed Dec 23, 2020

Commit 1f1c0f5

quantized tensor: add preliminary support for advanced indexing, try 2 (pytorch#49346)

Summary: Pull Request resolved: pytorch#49346 This is a less ambitious redo of pytorch#49129. We make the
```
xq_slice = xq[:, [0], :, :]
```
indexing syntax work if `xq` is a quantized Tensor. For now, we are making the code not crash, with an inefficient `dq -> index -> q` implementation. A future PR can optimize performance by removing the unnecessary memory copies (which will require some non-trivial changes to TensorIterator). Test Plan:
```
python test/test_quantization.py TestQuantizedOps.test_advanced_indexing
```
Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25539365 fbshipit-source-id: 98485875aaaf5743e1a940e170258057691be4fa
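The `dq -> index -> q` fallback can be sketched with per-tensor affine quantization on plain lists. Several details are assumptions for illustration: the helper names, the `[0, 255]` range, and the use of Python's `round` (which rounds half to even). The sketch highlights the two extra full-size copies that the future PR is meant to remove.

```python
def quantize(values, scale, zero_point, qmin=0, qmax=255):
    # Affine quantization: q = clamp(round(x / scale) + zero_point, qmin, qmax).
    return [min(qmax, max(qmin, round(x / scale) + zero_point)) for x in values]


def dequantize(qvalues, scale, zero_point):
    # Inverse map back to floating point: x = (q - zero_point) * scale.
    return [(q - zero_point) * scale for q in qvalues]


def quantized_index(qvalues, indices, scale, zero_point):
    # dq -> index -> q: correct, but materializes a full float copy of the data first.
    floats = dequantize(qvalues, scale, zero_point)
    picked = [floats[i] for i in indices]
    return quantize(picked, scale, zero_point)
```

With a consistent scale and zero point, the round trip returns the same integers as indexing the quantized values directly. This is why the copies are unnecessary and only a performance concern.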

Commit b1547e4

Unescape string in RPC error message (pytorch#49373)

Summary: Pull Request resolved: pytorch#49373 Unescape the string in the RPC error message to provide a better error message. Test Plan: CI Reviewed By: xush6528 Differential Revision: D25511730 fbshipit-source-id: 054f46d5ffbcb1350012362a023fafb1fe57fca1

Commit 28a5455

[StaticRuntime][ATen] Add out variant for narrow_copy (pytorch#49449)

Summary: Pull Request resolved: pytorch#49449 Similar to permute_out, add the out variant of `aten::narrow` (slice in c2), which does an actual copy. `aten::narrow` creates a view; however, a copy is incurred when we call `input.contiguous` in the ops that follow `aten::narrow`, in `concat_add_mul_replacenan_clip`, `casted_batch_one_hot_lengths`, and `batch_box_cox`. {F351263599} Test Plan: Unit test:
```
buck test //caffe2/aten:native_test
```
Benchmark with the adindexer model:
```
bs = 1 is neutral

Before:
I1214 21:32:51.919239 3285258 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.0886948. Iters per second: 11274.6
After:
I1214 21:32:52.492352 3285277 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.0888019. Iters per second: 11261

bs = 20 shows more gains probably because the tensors are bigger and therefore the cost of copying is higher

Before:
I1214 21:20:19.702445 3227229 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.527563. Iters per second: 1895.51
After:
I1214 21:20:20.370173 3227307 PyTorchPredictorBenchLib.cpp:209] PyTorch run finished. Milliseconds per iter: 0.508734. Iters per second: 1965.67
```
Reviewed By: bwasti Differential Revision: D25554109 fbshipit-source-id: 6bae62e6ce3456ff71559b635cc012fdcd1fdd0e
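The view-versus-copy distinction behind `narrow` and `narrow_copy` can be illustrated with Python's `memoryview`, which slices without copying. This is an analogy only, and `view_vs_copy` is a hypothetical helper:

```python
def view_vs_copy():
    buf = bytearray(b"abcdef")
    view = memoryview(buf)[1:4]  # like narrow: shares storage with buf, no copy
    copy = bytes(buf[1:4])       # like narrow_copy: owns its own bytes
    buf[1] = ord("X")            # mutate the shared storage after slicing
    return bytes(view), copy
```

The view sees the mutation (`b"Xcd"`) while the copy does not (`b"bcd"`). Paying for the copy once, into a planner-managed buffer, can be cheaper than having a downstream `contiguous()` allocate one later.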

Hao Lu authored and hwangdeyu committed Dec 23, 2020

Commit bc97e02

Revert D25554109: [StaticRuntime][ATen] Add out variant for narrow_copy

Test Plan: revert-hammer Differential Revision: D25554109 (pytorch@ed04b71) Original commit changeset: 6bae62e6ce34 fbshipit-source-id: bfa038e150166d0116bcae8f7a6415d98d4146de

Hao Lu authored and hwangdeyu committed Dec 23, 2020

Commit 1479e05

Making ops c10 full: optional out arguments (pytorch#49083)

Summary: Pull Request resolved: pytorch#49083 We have some (but very few) ops that take optional out arguments `Tensor(a!)? out`. This PR makes them non-optional mandatory arguments and enables c10-fullness for them. Only a very small number of ops is affected by this. Putting this up for discussion.

Alternatives considered: If we keep them optional, we run into lots of issues in the dispatcher. We have to decide what the dispatcher calling convention for this argument type should be.

1) If we keep passing them in as `Tensor&` arguments and return them as `tuple<Tensor&, Tensor&, Tensor&>`, so basically the same as currently, then the schema inference check will say "Your kernel function got inferred to have a `Tensor` argument but your native_functions.yaml declaration says `Tensor?`. This is a mismatch, you made an error". We could potentially disable that check, but that would open the door for real mistakes to not be reported anymore in the future. This sounds bad.
2) If we change them to a type that schema inference could differentiate from `Tensor`, say we pass them in as `const optional<Tensor>&` and return them as `tuple<const optional<Tensor>&, const optional<Tensor>&, const optional<Tensor>&>`, then our boxing logic fails because it can't recognize those as out overloads anymore and shortcut the return value as it is doing right now. We might be able to rewrite the boxing logic, but that could be difficult and could easily develop into a rabbit hole of having to clean up `Tensor&` references throughout the system where we use them.

Furthermore, having optional out arguments in C++ doesn't really make sense. The C++ API puts them at the front of the argument list, so you can't omit them anyway when calling an op. You would be able to omit them when calling from Python with out kwargs, but we're not sure we want that discrepancy between the C++ and Python APIs.

ghstack-source-id: 118660075 Test Plan: waitforsandcastle Reviewed By: ezyang Differential Revision: D25422197 fbshipit-source-id: 3cb25c5a3d93f9eb960d70ca014bae485be9f058

Commit 00e3716

Making ops c10-full: optional lists (pytorch#49088)

Summary: Pull Request resolved: pytorch#49088 We had special-case logic to support `int[]?` and `double[]?` but nothing for `DimnameList[]?`. This PR generalizes the logic to support optional lists, so it should now work with all types. It also enables c10-fullness for ops that were blocked by this. Note that using these arguments in a signature was always, and still is, expensive because the whole list needs to be copied. We should probably consider alternatives in the future, for example using `torch::List` instead of `ArrayRef`, which could work without copying the list. ghstack-source-id: 118660071 Test Plan: waitforsandcastle Reviewed By: ezyang Differential Revision: D25423901 fbshipit-source-id: dec58dc29f3bb4cbd89e2b95c42da204a9da2e0a

Commit bdfa87e

[PyTorch] Avoid move-constructing a List in listConstruct (pytorch#49355)

Summary: Pull Request resolved: pytorch#49355 List's move ctor is a little bit more expensive than you might expect, but we can easily avoid it. ghstack-source-id: 118624596 Test Plan: Roughly 1% improvement on internal benchmark. Reviewed By: hlu1 Differential Revision: D25542190 fbshipit-source-id: 08532642c7d1f1604e16c8ebefd1ed3e56f7c919

Commit 076d62f

Enhanced generators with grad-mode decorators (pytorch#49017)

Summary: This PR addresses the feature request outlined in pytorch#48713 for two-way communication with enhanced generators from [pep-342](https://www.python.org/dev/peps/pep-0342/). Briefly, the logic of the patch resembles `yield from` [pep-380](https://www.python.org/dev/peps/pep-0380/), which cannot be used, since the generator **must be interacted with from within the grad-mode context**, while yields from the decorator **must take place outside of the context**. Hence any interaction with the wrapped generator, be it via [.send](https://docs.python.org/3/reference/expressions.html?highlight=throw#generator.send), [.throw](https://docs.python.org/3/reference/expressions.html?highlight=throw#generator.throw), or even [.close](https://docs.python.org/3/reference/expressions.html?highlight=throw#generator.close), must be wrapped by a `with` clause. The patch is compatible with the `for i in gen: pass` and `next(gen)` use cases and allows two-way communication with the generator via `.send <-> yield` points.

### Logic

At lines [L37-L38](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L37-L38) we (the decorator) **start the wrapped generator** (coroutine) by issuing `None` into it (equivalently, we can use `next(gen)` here). Then we **dispatch responses of the generator** to our ultimate caller and **relay the latter's requests** into the generator in the loop on lines [L39-L52](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L39-L52). We yield the most recent response on [L40-L41](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L40-L41), at which point we become **paused**, waiting for the ultimate caller's next interaction with us.

If the caller **sends us a request**, then we become unpaused, move to [L51-L52](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L51-L52), and **forward it into the generator**, at which point we pause, waiting for its response. The response might be a value, an exception, or a `StopIteration`. In the case of an exception from the generator, we let it **bubble up** from the immediately surrounding [except clause](https://docs.python.org/3/reference/compound_stmts.html#the-try-statement) to the ultimate caller through the [outer try-except](https://github.com/ivannz/pytorch/blob/2dc287bba87fa6f05c49446c0239ffdcdb1e896e/torch/autograd/grad_mode.py#L36-L54). In the case of a `StopIteration`, we **take its payload and propagate it** to the caller via [return](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L54). In the case of a value, the flow and the loop continue.

The caller **throwing an exception at us** is handled much like a proper request, except that the exception plays the role of the request. In this case we **forward it into the generator** on lines [L47-L49](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L47-L49) and await its response. We explicitly **advance** the traceback one frame up, in order to indicate the **source of the exception within the generator**. Finally, `GeneratorExit` is handled on lines [L42-L45](https://github.com/ivannz/pytorch/blob/2d40296c0c6617b3980c86762be466c995aa7f8e/torch/autograd/grad_mode.py#L42-L45), where it closes the generator.

Updates: clarified exception propagation Pull Request resolved: pytorch#49017 Reviewed By: izdeby Differential Revision: D25567796 Pulled By: albanD fbshipit-source-id: 801577cccfcb2b5e13a08e77faf407881343b7b0

Commit 197266d

webdataset prototype - ListDirFilesIterableDataset (pytorch#48944)

Summary: Pull Request resolved: pytorch#48944 This is a stack PR for the webdataset prototype. I am trying to make each stack a separate dataset. To keep the implementation simple, each dataset will only support the basic functionality.
- [x] ListDirFilesDataset
- [x] LoadFilesFromDiskIterableDataset
- [x] ReadFilesFromTarIterableDataset
- [x] ReadFilesFromZipIterableDataset
- [x] RoutedDecoderIterableDataset

Test Plan: Imported from OSS Reviewed By: izdeby Differential Revision: D25541277 Pulled By: glaringlee fbshipit-source-id: 9e738f6973493f6be1d5cc1feb7a91513fa5807c
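Here is a minimal sketch of what a directory-listing dataset has to do, written as a plain generator. `list_dir_files` and its `masks`/`recursive` parameters are assumptions for illustration, not the prototype's actual signature:

```python
import fnmatch
import os


def list_dir_files(root, masks="*", recursive=False):
    """Yield paths of files under `root` whose basename matches any glob in `masks`."""
    if isinstance(masks, str):
        masks = [masks]
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # descend into subdirectories in a deterministic order
        for name in sorted(filenames):
            if any(fnmatch.fnmatch(name, mask) for mask in masks):
                yield os.path.join(dirpath, name)
        if not recursive:
            break  # os.walk yields `root` first; stop before descending
```

Because it is a generator, paths are produced lazily, which fits an iterable-style dataset.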

lixinyu authored and hwangdeyu committed Dec 23, 2020

Commit 5165093

webdataset prototype - LoadFilesFromDiskIterableDataset (pytorch#48955)

Summary: Pull Request resolved: pytorch#48955 Test Plan: Imported from OSS Reviewed By: izdeby Differential Revision: D25541393 Pulled By: glaringlee fbshipit-source-id: dea6ad64a7ba40abe45612d99f078b14d1da8bbf
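Continuing the same idea, a loader stage can turn paths into open streams. This is a minimal sketch that assumes `(path, stream)` pairs as the output. `load_files_from_disk` is a hypothetical name, and the caller is responsible for closing each stream:

```python
def load_files_from_disk(paths, mode="rb"):
    """Yield (path, open file object) pairs for each path in `paths`."""
    for path in paths:
        # Opened lazily, one file at a time; the consumer must close the stream.
        yield path, open(path, mode)
```

Keeping streams unopened until iteration reaches them avoids holding many file handles at once.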

lixinyu authored and hwangdeyu committed Dec 23, 2020

Commit 9745362

CUDA BFloat embedding (pytorch#44848)

Summary: Pull Request resolved: pytorch#44848 Reviewed By: izdeby Differential Revision: D25574204 Pulled By: ngimel fbshipit-source-id: b35f7253a6ad2b83f7b6b06862a5ab77295373e0

Commit bf3d1b4

Instantiate PackedConvWeight to avoid linking error (pytorch#49442)

Summary: Pull Request resolved: pytorch#49442 When moving Aten/native to app level, symbols from native/quantized may sit in a target away from some of their call sites. As a result, there are linker errors from missing symbols for instantiations of PackedConvWeight::prepack. The solution is to instantiate PackedConvWeight in the same compilation unit. It's similar to D24941989 (pytorch@fe6bb2d). ghstack-source-id: 118676374 Test Plan: CI Reviewed By: dhruvbird Differential Revision: D25576703 fbshipit-source-id: d6e3d11d51d8172ab8487ce44ec8c042889f0f11

Commit a213e48

.circleci: downgrade conda-package-handling to 1.6.0 (pytorch#49434)

Summary: Pull Request resolved: pytorch#49434 A bug introduced in conda-package-handling >= 1.6.1 makes archives above a certain size fail when extracted; see conda/conda-package-handling#71. This coincides with pytorch/builder#611. Signed-off-by: Eli Uriegas <eliuriegas@fb.com> Test Plan: Imported from OSS Reviewed By: xuzhao9, janeyx99, samestep Differential Revision: D25573390 Pulled By: seemethere fbshipit-source-id: 82173804f1b30da6e4b401c4949e2ee52065e149

Commit d73c1f4

[Docs] Updating init_process_group docs to indicate correct rank range (pytorch#49131)

Summary: Pull Request resolved: pytorch#49131 Users frequently assume the correct range of ranks is 1 ... `world_size`. This PR updates the docs to indicate that the correct rank range users should specify is 0 ... `world_size` - 1. Test Plan: Rendering and Building Docs Reviewed By: mrshenli Differential Revision: D25410532 fbshipit-source-id: fe0f17a4369b533dc98543204a38b8558e68497a
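The valid range is easy to check up front. This is a minimal sketch, and `check_rank` is a hypothetical helper that is not part of `torch.distributed`:

```python
def check_rank(rank, world_size):
    """Return `rank` if it lies in 0 .. world_size - 1, else raise ValueError."""
    if world_size < 1:
        raise ValueError(f"world_size must be >= 1, got {world_size}")
    if not 0 <= rank < world_size:
        raise ValueError(f"rank must be in [0, {world_size - 1}], got {rank}")
    return rank
```

For example, with `world_size=4` the valid ranks are exactly `range(4)`, i.e. 0, 1, 2, 3, and passing 4 is rejected.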

Commit 98c4a4d

[c10d Store] Store Python Docs Fixes (pytorch#49130)

Summary: Pull Request resolved: pytorch#49130 The Python Store API docs had some typos where boolean values were written in lowercase, which is incorrect Python syntax. This diff fixes those typos. Test Plan: Built and Rendered Docs Reviewed By: mrshenli Differential Revision: D25411492 fbshipit-source-id: fdbf1e6b8f81e9589e638286946cad68eb7c9252

Commit e9c93eb

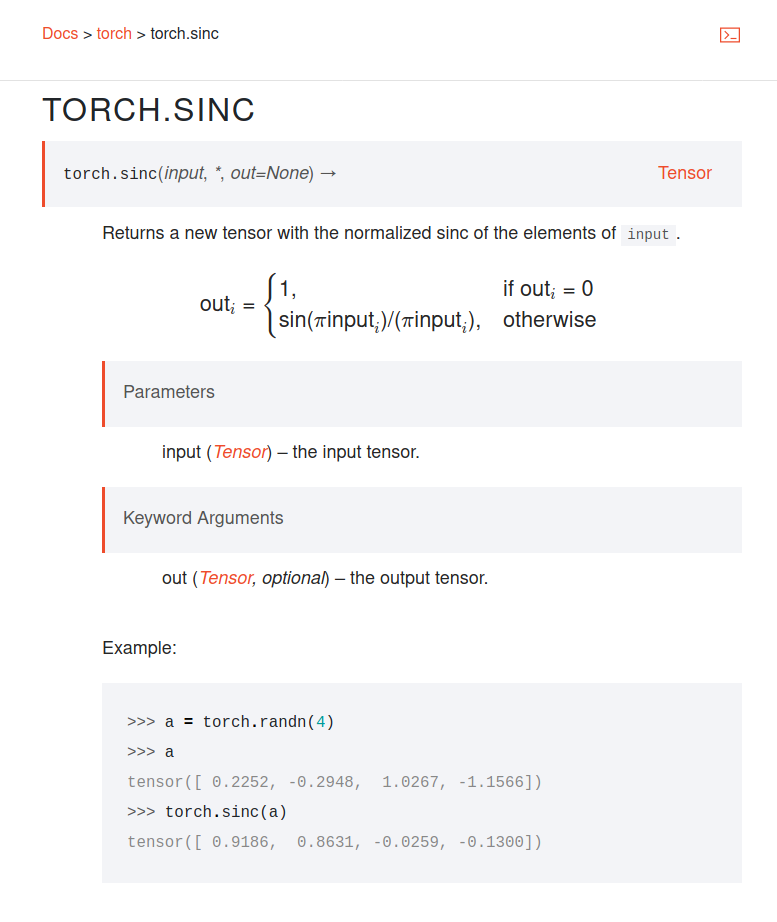

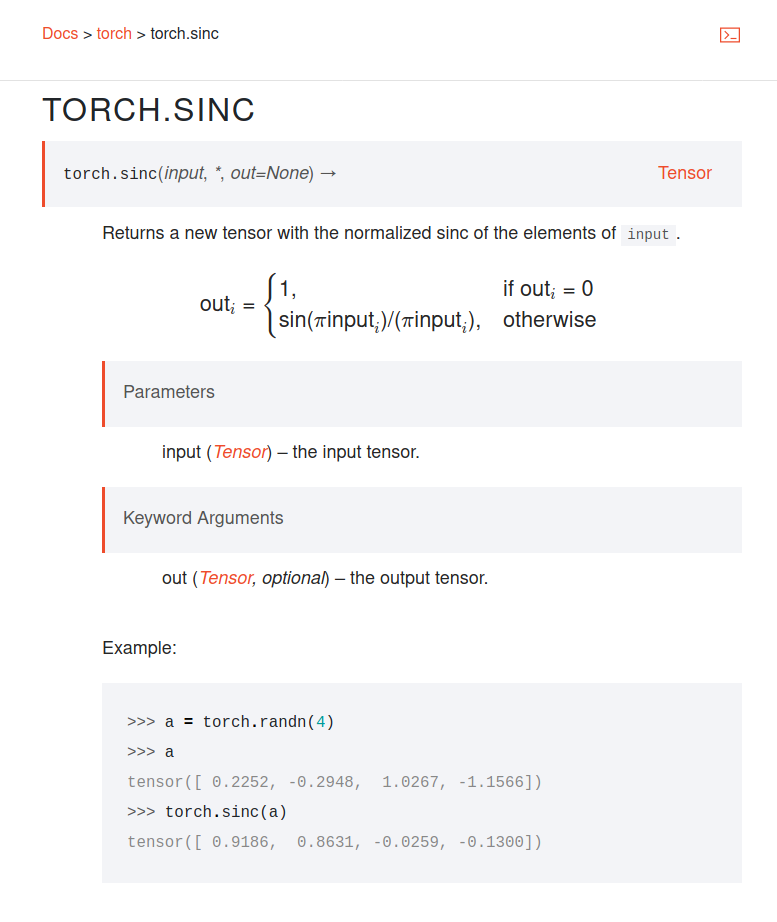

Add sinc operator (pytorch#48740)

Summary: Implements the sinc operator. See https://numpy.org/doc/stable/reference/generated/numpy.sinc.html  Pull Request resolved: pytorch#48740 Reviewed By: izdeby Differential Revision: D25564477 Pulled By: soulitzer fbshipit-source-id: 13f36a2b84dadfb4fd1442a2a40a3a3246cbaecb
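For reference, NumPy's `sinc` is the normalized form, sin(πx)/(πx), with the removable singularity at x = 0 filled in as 1. Here is a scalar sketch in plain Python (this is not the PyTorch kernel):

```python
import math


def sinc(x):
    """Normalized sinc, as defined by numpy.sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0:
        return 1.0  # removable singularity: the limit as x -> 0 is 1
    y = math.pi * x
    return math.sin(y) / y
```

The zeros fall at nonzero integers, and sinc(0.5) equals 2/π.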

Commit 6cb4910

Revert "Revert D24923679: Fixed einsum compatibility/performance issues (pytorch#46398)" (pytorch#49189)

Summary: Pull Request resolved: pytorch#49189 This reverts commit d307601 and fixes the bug with diagonals and ellipsis combined. Test Plan: Imported from OSS Reviewed By: glaringlee Differential Revision: D25540722 Pulled By: heitorschueroff fbshipit-source-id: 86d0c9a7dcfda600b546457dad102af2ff33e353

Commit 7b4218c

[caffe2][autograd] Avoid extensive -Wunused-variable warnings on _any_requires_grad (pytorch#49167)

Summary: Pull Request resolved: pytorch#49167 Building with clang and a fair warning level can result in hundreds of lines of compiler output of the form:
```
caffe2\gen_aten_libtorch\autograd\generated\VariableType_1.cpp(2279,8): warning: unused variable '_any_requires_grad' [-Wunused-variable]
  auto _any_requires_grad = compute_requires_grad( self );
       ^
caffe2\gen_aten_libtorch\autograd\generated\VariableType_1.cpp(2461,8): warning: unused variable '_any_requires_grad' [-Wunused-variable]
  auto _any_requires_grad = compute_requires_grad( grad_output, self );
       ^
caffe2\gen_aten_libtorch\autograd\generated\VariableType_1.cpp(2677,8): warning: unused variable '_any_requires_grad' [-Wunused-variable]
  auto _any_requires_grad = compute_requires_grad( self );
       ^
...
```
This happens when requires_derivative == False. Let's mark `_any_requires_grad` as potentially unused. If this were C++17 we would use `[[maybe_unused]]`, but to retain compatibility with C++11 we just mark it with `(void)`. Test Plan: CI + locally built Reviewed By: ezyang Differential Revision: D25421548 fbshipit-source-id: c56279a184b1c616e8717a19ee8fad60f36f37d1

Commit 6a56da9

Revert D25421263: [pytorch][PR] [numpy] torch.{all/any} : output dtype is always bool

Test Plan: revert-hammer Differential Revision: D25421263 (pytorch@c508e5b) Original commit changeset: c6c681ef9400 fbshipit-source-id: 4c0c9acf42b06a3ed0af8f757ea4512ca35b6c59

Commit 5125131

Reland "Add test for empty tensors for batch matmuls" (pytorch#48797)

Summary: This reverts commit c7746ad. Pull Request resolved: pytorch#48797 Reviewed By: mruberry Differential Revision: D25575264 Pulled By: ngimel fbshipit-source-id: c7f3b384db833d727bb5bd8a51f1493a13016d09
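The expected result for empty inputs follows from the definition. With an empty inner dimension, each output element is an empty sum, i.e. zero. An empty batch or output dimension yields an empty result. Here is a plain-Python sketch with shapes passed explicitly, since nested lists can't encode a shape once a dimension is 0. `bmm` here is a hypothetical helper, not `torch.bmm`:

```python
def bmm(a, b, batch, n, k, p):
    """Batched matmul on nested lists: a is (batch, n, k), b is (batch, k, p) -> (batch, n, p)."""
    return [
        [
            # sum() over an empty range is 0, so k == 0 yields an all-zero (n, p) block
            [sum(a[i][r][t] * b[i][t][c] for t in range(k)) for c in range(p)]
            for r in range(n)
        ]
        for i in range(batch)
    ]
```

These are the edge cases (empty batch, empty rows, empty inner dimension) that an "empty tensors" test for batch matmuls would want to cover.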

Commit c7ce84b

Adding support for CuDNN-based LSTM with projections (pytorch#47725)

Summary: Fixes pytorch#46213

I haven't updated the documentation yet; I will add those changes soon. There are a few other things that I didn't do, and I want to check whether I should:

1. I didn't expose projections in the C++ API: torch/csrc/api/src/nn/modules/rnn.cpp. Let me know if this is desirable and I will add those changes.
2. I didn't expose projections in the "lstm_cell" and "_thnn_differentiable_lstm_cell_backward" functions from aten/src/ATen/native/RNN.cpp. As far as I understand, they are not needed for nn.LSTM CPU execution. For lstm_cell, projections don't bring any real benefit: if the cell is used separately, a projection can easily be added in Python. For "_thnn_differentiable_lstm_cell_backward", I'm not sure where exactly that function is used, so I also disabled projections there for now. Please let me know if I should change that.
3. I added a check to the quantized_lstm_<data/input> functions that rejects projections for quantized LSTMs. But I didn't add any checks to the LSTMCell code. Since I disabled projections in the "lstm_cell" function, they should not be available for quantized models through any API other than quantized_lstm_<data/input>. Please let me know if I'm wrong and I will add checks to other places.
4. Projections are not supported for CuDNN versions < 7.1.2. Should I add a CuDNN version check and disable projections in that case? If so, what is the best way to do that?
5. Currently I added the projection weight as the last weight, so the layout is "w_ih, w_hh, b_ih, b_hh, w_hr". This breaks the assumption that biases come after weights, so I had to add extra if-s in various places. An alternative would be the "w_ih, w_hh, w_hr, b_ih, b_hh" layout, in which case the assumption would still hold. But then I would need to split the loop in the get_parameters function in aten/src/ATen/native/cudnn/RNN.cpp. In some cases I would also still need to add an "undefined" tensor in the 3rd position, because we get all 5 weights from CuDNN most of the time. So I'm not sure which way is better. Let me know if you think I should switch to the weights-then-biases layout.

Pull Request resolved: pytorch#47725 Reviewed By: zou3519 Differential Revision: D25449794 Pulled By: ngimel fbshipit-source-id: fe6ce59e481d1f5fd861a8ff7fa13d1affcedb0c
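
The "w_ih, w_hh, b_ih, b_hh, w_hr" layout from item 5 can be made concrete with a small shape calculator. This is a sketch that assumes the per-layer shapes documented for `torch.nn.LSTM` with `proj_size > 0`. `lstm_param_shapes` is a hypothetical helper, not part of PyTorch.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]


def lstm_param_shapes(input_size: int, hidden_size: int, num_layers: int = 1,
                      proj_size: int = 0, bidirectional: bool = False
                      ) -> List[List[Tuple[str, Shape]]]:
    """Hypothetical helper: parameter shapes per (layer, direction), in the
    order w_ih, w_hh, b_ih, b_hh, w_hr (w_hr only when proj_size > 0)."""
    if proj_size < 0 or (proj_size > 0 and proj_size >= hidden_size):
        raise ValueError("proj_size must be 0 or smaller than hidden_size")
    num_directions = 2 if bidirectional else 1
    # With projections, the recurrent state fed back into w_hh (and passed to
    # the next layer) has size proj_size instead of hidden_size.
    real_hidden = proj_size if proj_size > 0 else hidden_size
    gates = 4 * hidden_size  # input, forget, cell and output gates stacked
    result = []
    for layer in range(num_layers):
        layer_input = input_size if layer == 0 else real_hidden * num_directions
        for _ in range(num_directions):
            params = [
                ("w_ih", (gates, layer_input)),
                ("w_hh", (gates, real_hidden)),
                ("b_ih", (gates,)),
                ("b_hh", (gates,)),
            ]
            if proj_size > 0:
                # The projection weight is appended last, after the biases.
                params.append(("w_hr", (proj_size, hidden_size)))
            result.append(params)
    return result
```

For example, `lstm_param_shapes(10, 20, proj_size=5)` yields a single group whose fifth entry is `("w_hr", (5, 20))`. Appending `w_hr` leaves the existing four-entry prefix untouched. That is the trade-off item 5 describes: any code assuming "biases come after weights" needs a special case for the fifth entry.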

Igor Gitman authored and hwangdeyu committed Dec 23, 2020

Commit 1352101

Move inplace_is_vmap_compatible to BatchedTensorImpl.h (pytorch#49118)

Summary: Pull Request resolved: pytorch#49118 I need this in the next stack up. It seems useful to have as a helper function. Test Plan: - run tests Reviewed By: izdeby Differential Revision: D25563546 Pulled By: zou3519 fbshipit-source-id: a4031fdc4b2373cc230ba3c66738d91dcade96e2

Commit 0991d63

Update accumulate_grad to support vmap (pytorch#49119)

Summary: Pull Request resolved: pytorch#49119

I don't know how the accumulate_grad code gets hit via calling autograd.grad, so I went through all places in accumulate_grad that are definitely impossible to vmap through and changed them. To support this:
- I added vmap support for Tensor::strides(). It returns the strides that correspond to the public dimensions of the tensor (not the ones being vmapped over).
- Changed an instance of empty_strided to new_empty_strided.
- Replaced an in-place operation in accumulate_grad.h

Test Plan:
- added a test for calling strides() inside of vmap
- added tests that exercise all of the accumulate_grad code path. NB: I don't know why these tests exercise the code paths, but I've verified that they do via gdb.

Suggestions for some saner test cases are very welcome.

Reviewed By: izdeby Differential Revision: D25563543 Pulled By: zou3519 fbshipit-source-id: 05ac6c549ebd447416e6a07c263a16c90b2ef510
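
Under vmap, `Tensor::strides()` reports only the strides of the public dimensions. In plain Python, the rule looks roughly like the sketch below. It assumes a batched tensor is described by its physical strides plus the set of physical dimensions being vmapped over. `public_strides` is a hypothetical helper, not the BatchedTensorImpl code.

```python
from typing import AbstractSet, Sequence, Tuple


def public_strides(physical_strides: Sequence[int],
                   batch_dims: AbstractSet[int]) -> Tuple[int, ...]:
    """Hypothetical helper: drop the strides of dimensions being vmapped over,
    keeping the strides of the public (user-visible) dimensions in order."""
    ndim = len(physical_strides)
    for d in batch_dims:
        if not 0 <= d < ndim:
            raise IndexError(f"batch dim {d} out of range for {ndim}-D tensor")
    return tuple(s for i, s in enumerate(physical_strides) if i not in batch_dims)
```

For instance, a contiguous physical tensor of shape (3, 4, 5) has strides (20, 5, 1). If dim 0 is the vmapped dimension, the per-example view reports strides (5, 1).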

Commit b2acf95

Update TensorPipe submodule (pytorch#49467)

Summary: Pull Request resolved: pytorch#49467 Credit to beauby for the Bazel fixes. Test Plan: Export and run on CI Reviewed By: beauby Differential Revision: D25588027 fbshipit-source-id: efe1c543eb7438ca05254de67cf8b5cee625119a

Commit da5c385

Add docs/README.md to make existing doc build info more discoverable (pytorch#49286)

Summary: Closes pytorch#42003 Pull Request resolved: pytorch#49286 Reviewed By: glaringlee Differential Revision: D25535250 Pulled By: ezyang fbshipit-source-id: a7790bfe4528fa6a31698126cc687793fdf7ac3f

Commit 94344a2

Updated derivative rules for complex svd and pinverse (pytorch#47761)

Summary: Updated `svd_backward` to work correctly for complex-valued inputs. Updated `common_methods_invocations.py` to take dtype and device arguments for input construction. Removed `test_pinverse` from `test_autograd.py`; it is replaced by entries in `common_methods_invocations.py`. Added `svd` and `pinverse` to the list of complex tests.

References for complex-valued SVD differentiation:
- https://giggleliu.github.io/2019/04/02/einsumbp.html
- https://arxiv.org/abs/1909.02659

The derived rules assume gauge invariance of loss functions, so the result would not be correct for loss functions that are not gauge invariant: https://re-ra.xyz/Gauge-Problem-in-Automatic-Differentiation/ The same rule is implemented in TensorFlow and [BackwardsLinalg.jl](https://github.com/GiggleLiu/BackwardsLinalg.jl). Ref. pytorch#33152 Pull Request resolved: pytorch#47761 Reviewed By: izdeby Differential Revision: D25574962 Pulled By: mruberry fbshipit-source-id: 832b61303e883ad3a451b84850ccf0f36763a6f6
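
The gauge freedom behind that caveat can be written out explicitly. This is the standard phase ambiguity of the complex SVD, stated here for reference rather than taken from the PR. For a thin SVD with $k$ singular values and any diagonal unitary $D$:

$$
A = U \Sigma V^{H}, \qquad D = \operatorname{diag}\left(e^{i\phi_1}, \dots, e^{i\phi_k}\right)
$$

$$
(UD)\,\Sigma\,(VD)^{H} = U D \Sigma D^{H} V^{H} = U \Sigma D D^{H} V^{H} = U \Sigma V^{H}
$$

The middle step uses the fact that the diagonal matrices $D$ and $\Sigma$ commute, and the last uses $D D^{H} = I$. A loss $\mathcal{L}$ is gauge invariant when $\mathcal{L}(UD, \Sigma, VD) = \mathcal{L}(U, \Sigma, V)$ for every such $D$. A loss that depends only on $\Sigma$, or only on the reconstructed matrix, qualifies. One that reads the phase of an individual singular vector does not.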

Commit 6315a7e

[quant][docs] Add fx graph mode quantization to quantization docs (pytorch#49211)

Summary: Pull Request resolved: pytorch#49211 Test Plan: Imported from OSS Reviewed By: raghuramank100 Differential Revision: D25507480 fbshipit-source-id: 9e9e4b5fef979f5621c1bbd1b49e9cc6830da617

Commit bbaa6bb

stft: Change require_complex warning to an error (pytorch#49022)

Summary: Pull Request resolved: pytorch#49022 Test Plan: Imported from OSS Reviewed By: ngimel Differential Revision: D25569586 Pulled By: mruberry fbshipit-source-id: 09608088f540c2c3fc70465f6a23f2aec5f24f85

Commit 0d82603

Revert D25564477: [pytorch][PR] Add sinc operator

Test Plan: revert-hammer Differential Revision: D25564477 (pytorch@bbc7143) Original commit changeset: 13f36a2b84da fbshipit-source-id: 58cbe8109efaf499dd017531878b9fbbb27976bc

Commit 0176da6

Making ops c10-full: Storage arguments (pytorch#49146)

Summary: Pull Request resolved: pytorch#49146 Add support for Storage arguments to IValue and the JIT typing system, and make ops that were blocked on that c10-full. ghstack-source-id: 118710665 (Note: this ignores all push blocking failures!) Test Plan: waitforsandcastle Reviewed By: ezyang Differential Revision: D25456799 fbshipit-source-id: da14f125af352de5fcf05a83a69ad5a69d5a3b45

Commit 8dcd580

Allow zero annealing epochs (pytorch#47579)

Summary: Fixes pytorch#47578. Pull Request resolved: pytorch#47579 Reviewed By: H-Huang Differential Revision: D25429403 Pulled By: vincentqb fbshipit-source-id: c42fbcd71b46e07c672a1e9661468848ac16de38

Commit 6f50a18

Revert D25507480: [quant][docs] Add fx graph mode quantization to quantization docs

Test Plan: revert-hammer Differential Revision: D25507480 (pytorch@7729581) Original commit changeset: 9e9e4b5fef97 fbshipit-source-id: fdb08d824209b97defaba2e207d1a914575a6ae7

Mike Ruberry authored and hwangdeyu committed Dec 23, 2020

Commit 3bbc766

Fix link in distributed contributing doc and add link (pytorch#49141)

Summary: One of the links for ramp-up tasks wasn't showing any results, and the other showed only RPC results. Instead, I changed them to a single link that uses `pt_distributed_rampup`, which seems reasonable because the developer will be able to see both RPC and distributed tasks. Also added the test command for DDP tests. Pull Request resolved: pytorch#49141 Reviewed By: ezyang Differential Revision: D25597560 Pulled By: rohan-varma fbshipit-source-id: 85d7d2964a19ea69fe149c017cf88dff835b164a

Commit e7b6a29

Add note to torch docs for sinh/cosh (pytorch#49413)

Summary: Address pytorch#48641

Documents the behavior of sinh and cosh in the edge cases:
```
>>> b = torch.full((15,), 89, dtype=torch.float32)
>>> torch.sinh(b)
tensor([2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38])
>>> b = torch.full((16,), 89, dtype=torch.float32)
>>> torch.sinh(b)
tensor([inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf])
>>> b = torch.full((17,), 89, dtype=torch.float32)
>>> torch.sinh(b)
tensor([ inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, inf, 2.2448e+38])
>>> b = torch.full((32,), 89, dtype=torch.float32)[::2]
>>> torch.sinh(b)
tensor([2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38, 2.2448e+38])
```
See https://sleef.org/purec.xhtml

Pull Request resolved: pytorch#49413 Reviewed By: ezyang Differential Revision: D25587932 Pulled By: soulitzer fbshipit-source-id: 6db75c45786f4b95f82459d0ce5efa37ec0774f0
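
Inputs near 89 sit right at the edge of float32 range. sinh(89) ≈ 2.2448e+38 is below the float32 maximum (about 3.4028e+38), but exp(89) ≈ 4.49e+38 is not. Any code path that materializes exp(x) in float32 before halving it will therefore overflow to `inf`. The sketch below emulates float32 rounding with Python's `struct` module to show this. `to_float32` and `naive_sinh_float32` are hypothetical helpers that illustrate the numerical hazard; this is not a claim about how SLEEF actually computes sinh.

```python
import math
import struct

# Largest finite float32 (bit pattern 0x7f7fffff), about 3.4028e+38.
FLOAT32_MAX = struct.unpack("<f", bytes.fromhex("ffff7f7f"))[0]


def to_float32(x: float) -> float:
    """Hypothetical helper: round a Python float to float32, mapping overflow
    to +/-inf (struct raises OverflowError for finite out-of-range values)."""
    try:
        return struct.unpack("<f", struct.pack("<f", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def naive_sinh_float32(x: float) -> float:
    """Hypothetical helper: sinh(x) = (exp(x) - exp(-x)) / 2 with every
    intermediate rounded to float32, so exp(x) can overflow on its own."""
    ep = to_float32(math.exp(x))
    em = to_float32(math.exp(-x))
    return to_float32((ep - em) / 2)
```

Here `to_float32(math.sinh(89.0))` is finite (about 2.2448e+38), while `naive_sinh_float32(89.0)` is `inf`, because the intermediate `exp(89.0)` already exceeds `FLOAT32_MAX`.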

Commit 470a9cf

Refine `ConvParams::use_nnpack()` (pytorch#49464)

Summary: NNPACK convolution algorithms can only be used for kernels up to 16x16. Fixes pytorch#49462 Pull Request resolved: pytorch#49464 Reviewed By: xuzhao9 Differential Revision: D25587879 Pulled By: malfet fbshipit-source-id: 658197f23c08cab97f0849213ecee3f91f96c932
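
The kernel-size restriction can be written as a simple predicate. This is a hypothetical Python rendering of the 16x16 limit stated above. It assumes a 4-D conv weight laid out as (out_channels, in_channels, kH, kW). It is not the actual `ConvParams::use_nnpack()` body, which may check other conditions as well.

```python
from typing import Sequence

NNPACK_MAX_KERNEL = 16  # limit stated in the commit message


def kernel_fits_nnpack(weight_shape: Sequence[int]) -> bool:
    """Hypothetical helper: True if a 4-D conv weight (out_ch, in_ch, kH, kW)
    has no spatial kernel dimension larger than 16."""
    if len(weight_shape) != 4:
        return False
    k_h, k_w = weight_shape[2], weight_shape[3]
    return k_h <= NNPACK_MAX_KERNEL and k_w <= NNPACK_MAX_KERNEL
```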

Commit ce124c2

T66557700 Support default argument values of a method (pytorch#48863)

Summary: Pull Request resolved: pytorch#48863 Support default arguments when invoking a module via PyTorch Lite (`mobile::Module`). Test Plan: `buck test mode/dbg //caffe2/test/cpp/jit:jit -- LiteInterpreterTest.MethodInvocation` and `buck test mode/dbg caffe2/test:mobile -- test_method_calls_with_optional_arg` Reviewed By: raziel, iseeyuan Differential Revision: D25152559 fbshipit-source-id: bbf52f1fbdbfbc6f8fa8b65ab524b1cd4648f9c0
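
Supporting default argument values amounts to padding the caller's arguments with the defaults recorded in the method's schema. The sketch below models a schema as a list of hypothetical `(name, default)` pairs, with a sentinel meaning "no default". It illustrates the idea only and is not the `mobile::Module` implementation.

```python
from typing import Any, List, Sequence, Tuple

NO_DEFAULT = object()  # hypothetical sentinel: the argument has no default


def fill_defaults(schema: Sequence[Tuple[str, Any]], args: Sequence[Any]) -> List[Any]:
    """Append schema defaults for trailing arguments the caller omitted."""
    if len(args) > len(schema):
        raise TypeError(f"expected at most {len(schema)} arguments, got {len(args)}")
    filled = list(args)
    for name, default in schema[len(args):]:
        if default is NO_DEFAULT:
            raise TypeError(f"missing required argument '{name}'")
        filled.append(default)
    return filled
```

With `schema = [("x", NO_DEFAULT), ("scale", 1.0), ("bias", None)]`, calling `fill_defaults(schema, [3])` gives `[3, 1.0, None]`.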

Commit 0998854

[PyTorch] Merge CoinflipTLS into RecordFunctionTLS (pytorch#49359)

Summary: Pull Request resolved: pytorch#49359 This should be both slightly more efficient (1 less TLS guard check in at::shouldRunRecordFunction) and definitely more correct (CoinflipTLS is now saved whenever RecordFunctionTLS is saved), fixing a bad merge that left RecordFunctionTLS::tries_left dead. ghstack-source-id: 118624402 Test Plan: Review, CI Reviewed By: hlu1 Differential Revision: D25542799 fbshipit-source-id: 310f9fd157101f659cea13c331b2a0ee6db2db88

Commit c971a62

[PyTorch] Avoid extra Tensor refcounting in _cat_out_cpu (pytorch#49364)

Summary: Pull Request resolved: pytorch#49364 We had a local `Tensor` when we only needed a `const Tensor&`. ghstack-source-id: 118624595 Test Plan: Internal benchmark. Reviewed By: hlu1 Differential Revision: D25544731 fbshipit-source-id: 7b9656d0371ab65a6313cb0ad4aa1df707884c1c

Commit 4df68b3

[PyTorch] Use .sizes() instead of .size() in _cat_out_cpu (pytorch#49368)

Summary: Pull Request resolved: pytorch#49368 The former is faster because it doesn't allow negative indexing (which we don't use). ghstack-source-id: 118624598 Test Plan: internal benchmark Reviewed By: hlu1 Differential Revision: D25545777 fbshipit-source-id: b2714fac95c801fd735fac25b238b4a79b012993
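
The extra cost of `size(dim)` comes from dimension wrapping. Because it accepts negative indices, it must normalize and bounds-check `dim` on every call, whereas indexing into `sizes()` skips that step. Below is a Python sketch of the wrapping step, loosely modeled on ATen's `maybe_wrap_dim` but not copied from it. The `size` helper here is hypothetical and stands in for `Tensor::size(dim)`.

```python
from typing import Sequence


def maybe_wrap_dim(dim: int, ndim: int) -> int:
    """Map a possibly negative dim into [0, ndim), raising if out of range."""
    if not -ndim <= dim < ndim:
        raise IndexError(
            f"Dimension out of range (expected to be in range of "
            f"[{-ndim}, {ndim - 1}], but got {dim})")
    return dim + ndim if dim < 0 else dim


def size(sizes: Sequence[int], dim: int) -> int:
    # Hypothetical stand-in for Tensor::size(dim): wrap and check, then index.
    # Calling code that already holds a valid non-negative dim can index
    # `sizes` directly and skip this work, which is the point of the change.
    return sizes[maybe_wrap_dim(dim, len(sizes))]
```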

Commit bff610b

[PyTorch] Use .sizes() instead of .size() in cat_serial_kernel_impl (pytorch#49371)

Summary: Pull Request resolved: pytorch#49371 As with the previous diff, .sizes() is strictly more efficient. ghstack-source-id: 118627223 Test Plan: internal benchmark Differential Revision: D25546409 fbshipit-source-id: 196034716b6e11efda1ec8cb1e0fce7732d73eb4

Commit 51e4cc9

[PyTorch] Make tls_local_dispatch_key_set inlineable (reapply) (pytorch#49412)

Summary: Pull Request resolved: pytorch#49412 FLAGS_disable_variable_dispatch had to go, but it looks like its only users were some benchmarks anyway. ghstack-source-id: 118669590 Test Plan: Small (order of 0.1%) improvement on internal benchmarks. Wait for GitHub CI, since this was reverted before due to a CI break. Reviewed By: ezyang Differential Revision: D25547962 fbshipit-source-id: 58424b1da230fdc5d27349af762126a5512fce43

Commit e70d3f0

BFloat16: add explicit dtype support for to_mkldnn and to_dense (pytorch#48881)

Summary: Pull Request resolved: pytorch#48881 Test Plan: Imported from OSS Reviewed By: ngimel Differential Revision: D25537190 Pulled By: VitalyFedyunin fbshipit-source-id: a61a433c638e2e95576f88f081b64ff171b2316e

Commit fb4da16

Introduce tools.codegen.api.translate (pytorch#49122)

Summary: Pull Request resolved: pytorch#49122

cpparguments_exprs has induced a lot of head scratching in many recent PRs about how to structure the code in a good way. This PR replaces the old algorithm with an entirely new algorithm inspired by logic programming. The net result is shorter, cleaner and should be more robust to future changes.

This PR is a bit of a whopper. Here is the order to review it:

- tools/codegen/api/types.py
  - Deleted CppArgument, CppArgumentPackIface (and subclasses), CppExpr, DispatcherExpr, DispatcherArgument, NativeExpr, NativeArgument, MetaArgument. All things previously called XArgument are now Binding. All things previously called XExpr are now Expr. I deleted the `__str__` implementation on Binding and fixed all call sites not to use it. On Binding, I renamed `str_no_default` and `str_default` to `defn` and `decl` for better symmetry with the corresponding signature concepts, although I'm open to renaming them back to their original versions.
  - Obviously, things are less type safe without the class distinctions. So I introduce a new ADT called CType. CType represents the *semantic C++ type* of a binding: it is both the C++ type (e.g., `const Tensor&`) and the argument name that specifies what the binding denotes (e.g., `other`). Every binding now records its CType. The key observation here is that you don't actually care whether a given expression is from the cpp, dispatcher or native API; what you care about is having enough information to know what the expression means, so you can use it appropriately. CType has this information. For the most part, ArgNames are just the string names of the arguments as you see them in JIT schema, but there is one case (`possibly_redundant_memory_format`) where we encode a little extra information. Unlike the plain strings we previously used to represent C++ types, CTypes have a little bit of structure around optionals and references, because the translation code needs to work around these concepts.
  - I took the opportunity to kill all of the private fields like `_arguments` and `_returns_type` (since the argument types don't make sense anymore). Everything is computed for you on the fly. If this is a perf problem in codegen, we can start using the `cached_property` decorator.
  - All of the heavy lifting in CppSignature.argument_packs has been moved to the cpp module. We'll head over there next. Similarly, all of the exprs methods now call translate, the new functionality which we haven't gotten to yet.
- tools/codegen/api/cpp.py
  - We refactor all of the type computation functions to return CType instead of str. Because CTypes need to know the denotation, there is a new `binds: ArgName` argument to most functions that provides the denotation, so we can slot it in. (An alternative would have been to construct CTypes without denotations and then fill them in post facto, but I didn't do it this way. One downside is that there are some places where I need a CType without a denotation, so I fill these in with `__placeholder__` whenever this happens.)
  - `argument` and `arguments` are now extremely simple. There is no more Pack business; they just produce one or more Bindings. The one thing of note is that when both a `memory_format` and `options` are in scope, we label the memory format as `possibly_redundant_memory_format`. This will be used in translation.
- tools/codegen/api/dispatcher.py and tools/codegen/api/native.py
  - Same deal as cpp.py. One thing is that `cpparguments_exprs` is deleted; that logic is now in the translator.
- tools/codegen/api/translate.py
  - The translator! It uses a very simple backwards deduction engine to work out how to fill in the arguments of functions. There are comments in the file that explain how it works.
- Everything else: just some small call site tweaks for places where I changed the API.

Signed-off-by: Edward Z. Yang <ezyang@fb.com> Test Plan: Imported from OSS Reviewed By: ljk53 Differential Revision: D25455887 Pulled By: ezyang fbshipit-source-id: 90dc58d420d4cc49281aa8647987c69f3ed42fa6
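
The backwards-deduction idea can be shown with a toy solver. To produce an argument of a given semantic type, it either finds a binding that already has that type or applies a rule whose inputs can themselves be produced. Everything here is a hypothetical simplification: the `CType` tuple, the two rules, and the expression strings. The real `tools/codegen/api/translate.py` has its own types and a larger rule set.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy "semantic C++ type": (C++ type, what the value denotes). Hypothetical.
CType = Tuple[str, str]
# A rule: the sub-goals it needs, and how to build an expression from them.
Rule = Tuple[List[CType], Callable[..., str]]

RULES: Dict[CType, Rule] = {
    # A const reference can be taken from an owned Tensor with the same denotation.
    ("const Tensor&", "self"): ([("Tensor", "self")], lambda e: e),
    # TensorOptions can be assembled from its scattered components.
    ("TensorOptions", "options"): (
        [("ScalarType", "dtype"), ("Layout", "layout"), ("Device", "device")],
        lambda d, l, dev: f"TensorOptions().dtype({d}).layout({l}).device({dev})",
    ),
}


def solve(goal: CType, bindings: Dict[CType, str]) -> Optional[str]:
    """Return an expression of semantic type `goal`, or None if underivable."""
    if goal in bindings:
        return bindings[goal]  # already in scope: use it directly
    if goal in RULES:
        subgoals, build = RULES[goal]
        subexprs = [solve(g, bindings) for g in subgoals]  # work backwards
        if all(e is not None for e in subexprs):
            return build(*subexprs)
    return None


def translate(bindings: Dict[CType, str], goals: List[CType]) -> List[str]:
    """Produce one expression per goal, failing loudly if one is missing."""
    exprs = []
    for goal in goals:
        expr = solve(goal, bindings)
        if expr is None:
            raise ValueError(f"cannot produce {goal}")
        exprs.append(expr)
    return exprs
```

A solver like this only needs to know what each binding *means*, not which API (cpp, dispatcher, native) it came from. That is the observation behind introducing CType.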

Commit 15bc45f

Revert D25569586: stft: Change require_complex warning to an error

Test Plan: revert-hammer Differential Revision: D25569586 (pytorch@5874925) Original commit changeset: 09608088f540 fbshipit-source-id: 6a5953b327a4a2465b046e29bb007a0c5f4cf14a

Mike Ruberry authored and hwangdeyu committed Dec 23, 2020

Commit c482c5d

[NNC] Don't inline output buffers on CPU (pytorch#49488)

Summary: In pytorch#48967 we enabled output buffer inlining, which results in duplicate computation if one output depends on another. This was done to fix correctness for CUDA, but is not needed for correctness for CPU and results in perf slowdown. The output buffer inlining solution for CUDA is intended to be an interim solution because it does not work with reductions. Pull Request resolved: pytorch#49488 Reviewed By: ezyang Differential Revision: D25596071 Pulled By: eellison fbshipit-source-id: bc3d987645da5ce3c603b4abac3586b169656cfd
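
A call counter makes the duplicate computation visible. This toy sketch assumes two outputs where `b` is computed from `a`. `run_inlined`, `run_buffered` and `Counter` are hypothetical names, not NNC APIs. Inlining `a` into `b` re-evaluates `a`'s expression for every element of `b`, while reading `a`'s stored buffer evaluates it only once.

```python
from typing import List, Tuple


class Counter:
    """Counts how often the (stand-in) expression for output `a` is evaluated."""

    def __init__(self) -> None:
        self.calls = 0

    def expensive(self, x: float) -> float:
        self.calls += 1
        return x * x + 1.0


def run_inlined(xs: List[float], c: Counter) -> Tuple[List[float], List[float]]:
    a = [c.expensive(x) for x in xs]
    # `a` is inlined into `b`: its expression is evaluated again per element.
    b = [2.0 * c.expensive(x) for x in xs]
    return a, b


def run_buffered(xs: List[float], c: Counter) -> Tuple[List[float], List[float]]:
    a = [c.expensive(x) for x in xs]
    # `b` reads the already-computed buffer for `a`.
    b = [2.0 * v for v in a]
    return a, b
```

Both variants produce identical outputs. For three inputs, the inlined one calls `expensive` six times and the buffered one three times, which is the CPU slowdown the commit removes.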

Elias Ellison authored and hwangdeyu committed Dec 23, 2020

Commit fb0a942

Prevent accidentally writing old style ops (pytorch#49510)

Summary: Pull Request resolved: pytorch#49510 Adding old style operators with out arguments will break XLA. This prevents that. See for background: https://fb.workplace.com/groups/pytorch.dev/permalink/809934446251704/ This is a temporary change that will prevent this breakage for the next couple of days until the problem is resolved for good. It will be deleted in pytorch#49164 then. ghstack-source-id: 118756437 (Note: this ignores all push blocking failures!) Test Plan: waitforsandcastle Reviewed By: bhosmer Differential Revision: D25599112 fbshipit-source-id: 6b0ca4da4b55da8aab9d1b332cd9f68e7602301e

Commit c694e7d

.circleci: Only downgrade if we have conda (pytorch#49519)

Summary: Signed-off-by: Eli Uriegas <eliuriegas@fb.com> Pull Request resolved: pytorch#49519 Reviewed By: robieta Differential Revision: D25603779 Pulled By: seemethere fbshipit-source-id: ca8d811925762a5a413ca906d94c974a4ac5b132

Commit b39b6cb

Fix bad error message when int overflow (pytorch#48250)

Summary: Fixes pytorch#48114

Before:
```
>>> torch.empty(2 * 10 ** 20)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
TypeError: empty(): argument 'size' must be tuple of ints, but found element of type int at pos 1
```
After fix:
```
>>> torch.empty(2 * 10 ** 20)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
RuntimeError: Overflow when unpacking long
```
Unclear whether we need a separate test for this case; I can add one if it's necessary.

Pull Request resolved: pytorch#48250 Reviewed By: linbinyu Differential Revision: D25105217 Pulled By: ezyang fbshipit-source-id: a5aa7c0266945c8125210a2fd34ce4b6ba940c92
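
The new message comes from checking that a Python integer fits in a signed 64-bit integer before using it as a size. The value 2 * 10 ** 20 is about 2e20, well above the int64 maximum of about 9.22e18. The sketch below mimics that check in Python. `unpack_long` and `parse_size` are hypothetical helpers; PyTorch performs the real check in its C++ argument-parsing utilities.

```python
from typing import Sequence, Tuple

INT64_MIN = -(2 ** 63)
INT64_MAX = 2 ** 63 - 1


def unpack_long(value: int) -> int:
    """Hypothetical helper: return `value` if it fits in int64, else raise
    with the same message as the 'After fix' output above."""
    if not INT64_MIN <= value <= INT64_MAX:
        raise RuntimeError("Overflow when unpacking long")
    return value


def parse_size(sizes: Sequence[int]) -> Tuple[int, ...]:
    # Hypothetical: validate every element of a size argument.
    return tuple(unpack_long(s) for s in sizes)
```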

Commit 3be7381

Relax the atol/rtol of layernorm math kernel test. (pytorch#49507)

Summary: Pull Request resolved: pytorch#49507 Test Plan: Imported from OSS Reviewed By: mruberry Differential Revision: D25598424 Pulled By: ailzhang fbshipit-source-id: b3f43e84f177cf7c14831b0b83a399b155c813c4

Ailing Zhang authored and hwangdeyu committed Dec 23, 2020

Commit 12c9616

Fix CUDA extension ninja build (pytorch#49344)

Summary: I am submitting this PR on behalf of Janne Hellsten (nurpax) from NVIDIA, for the convenience of the CLA. Thanks a lot to Janne for the contribution!

Currently, the ninja build decides somewhat randomly whether or not to rebuild a .cu file. There are actually two issues. First, the arch list in the build command is ordered randomly; when the order changes, ninja rebuilds unconditionally, regardless of timestamps. Second, header files are not included in the dependency list, so if a header file changes, ninja may not rebuild. This PR fixes both issues. The fix for the second issue requires nvcc >= 10.2. nvcc < 10.2 can still build CUDA extensions as before, but it will not detect changes in header files.

Pull Request resolved: pytorch#49344 Reviewed By: glaringlee Differential Revision: D25540157 Pulled By: ezyang fbshipit-source-id: 197541690d7f25e3ac5ebe3188beb1f131a4c51f
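
The first fix comes down to emitting the `-gencode` flags in a stable order. Ninja rebuilds a target when its command line changes, so a command line that only reorders the same flags still triggers a rebuild. The sketch below is hypothetical and only loosely modeled on `torch.utils.cpp_extension`. It assumes arch entries such as `"7.0"` or `"8.0+PTX"`, and `gencode_flags` is an illustrative name.

```python
from typing import Iterable, List


def gencode_flags(arch_list: Iterable[str]) -> List[str]:
    """Hypothetical helper: build nvcc -gencode flags in a deterministic order.

    Entries are assumed to look like "7.0" or "8.0+PTX". Plain lexicographic
    sorting is enough here: the goal is a stable order, not numeric order.
    """
    flags = []
    for arch in sorted(set(arch_list)):
        ptx = arch.endswith("+PTX")
        num = (arch[: -len("+PTX")] if ptx else arch).replace(".", "")
        flags.append(f"-gencode=arch=compute_{num},code=sm_{num}")
        if ptx:
            # Also embed PTX so newer GPUs can JIT-compile the kernel.
            flags.append(f"-gencode=arch=compute_{num},code=compute_{num}")
    # Drop duplicates (e.g. "8.0" and "8.0+PTX") while keeping the order.
    return list(dict.fromkeys(flags))
```

However the input list is ordered, the resulting command line is identical, so ninja's recorded command does not change between runs.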

Commit 2aa0817

[extensions] fix `is_ninja_available` during cuda extension building (pytorch#49443)

Summary: tldr: the current version of `is_ninja_available` in `torch/utils/cpp_extension.py` fails to run under recent versions of pip with the new build isolation feature, which is now the default. This PR fixes this problem.

The full story follows:

--------------------------

Currently, trying to build https://github.com/facebookresearch/fairscale/, which builds cuda extensions, fails with recent pip versions. The build fails in `is_ninja_available`, which runs a simple subprocess, `ninja --version`, but does it with a /dev/null stream override that seems to break with the new pip versions. Currently I have `pip==20.3.3`. The recent pip performs build isolation, which first fetches all dependencies to somewhere under /tmp/pip-install-xyz and then builds the package.

If I build:
```
pip install fairscale --no-build-isolation
```
everything works.

When building normally (i.e. without `--no-build-isolation`), the failure is a long, long trace:
<details> <summary>Full log</summary> <pre> pip install fairscale Collecting fairscale Downloading fairscale-0.1.1.tar.gz (83 kB) |████████████████████████████████| 83 kB 562 kB/s Installing build dependencies ... done Getting requirements to build wheel ...
error ERROR: Command errored out with exit status 1: command: /home/stas/anaconda3/envs/main-38/bin/python /home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py get_requires_for_build_wheel /tmp/tmpjvw00c7v cwd: /tmp/pip-install-1wq9f8fp/fairscale_347f218384a64f24b8d5ce846641213e Complete output (55 lines): running egg_info writing fairscale.egg-info/PKG-INFO writing dependency_links to fairscale.egg-info/dependency_links.txt writing requirements to fairscale.egg-info/requires.txt writing top-level names to fairscale.egg-info/top_level.txt Traceback (most recent call last): File "/home/stas/anaconda3/envs/main-38/bin/ninja", line 5, in <module> from ninja import ninja ModuleNotFoundError: No module named 'ninja' Traceback (most recent call last): File "/home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 280, in <module> main() File "/home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 263, in main json_out['return_val'] = hook(**hook_input['kwargs']) File "/home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 114, in get_requires_for_build_wheel return hook(config_settings) File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 149, in get_requires_for_build_wheel return self._get_build_requires( File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 130, in _get_build_requires self.run_setup() File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 145, in run_setup exec(compile(code, __file__, 'exec'), locals()) File "setup.py", line 56, in <module> setuptools.setup( File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/__init__.py", line 153, in setup return distutils.core.setup(**attrs) File 
"/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/core.py", line 148, in setup dist.run_commands() File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/dist.py", line 966, in run_commands self.run_command(cmd) File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/dist.py", line 985, in run_command cmd_obj.run() File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/command/egg_info.py", line 298, in run self.find_sources() File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/command/egg_info.py", line 305, in find_sources mm.run() File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/command/egg_info.py", line 536, in run self.add_defaults() File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/setuptools/command/egg_info.py", line 572, in add_defaults sdist.add_defaults(self) File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/command/sdist.py", line 228, in add_defaults self._add_defaults_ext() File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/command/sdist.py", line 311, in _add_defaults_ext build_ext = self.get_finalized_command('build_ext') File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/cmd.py", line 298, in get_finalized_command cmd_obj = self.distribution.get_command_obj(command, create) File "/home/stas/anaconda3/envs/main-38/lib/python3.8/distutils/dist.py", line 858, in get_command_obj cmd_obj = self.command_obj[command] = klass(self) File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 351, in __init__ if not is_ninja_available(): File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1310, in is_ninja_available subprocess.check_call('ninja --version'.split(), stdout=devnull) File "/home/stas/anaconda3/envs/main-38/lib/python3.8/subprocess.py", line 364, in check_call raise 
CalledProcessError(retcode, cmd) subprocess.CalledProcessError: Command '['ninja', '--version']' returned non-zero exit status 1. ---------------------------------------- ERROR: Command errored out with exit status 1: /home/stas/anaconda3/envs/main-38/bin/python /home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py get_requires_for_build_wheel /tmp/tmpjvw00c7v Check the logs for full command output. </pre> </details>

The middle of it is what we want:
```
  File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 351, in __init__
    if not is_ninja_available():
  File "/tmp/pip-build-env-a5x2icen/overlay/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1310, in is_ninja_available
    subprocess.check_call('ninja --version'.split(), stdout=devnull)
  File "/home/stas/anaconda3/envs/main-38/lib/python3.8/subprocess.py", line 364, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command '['ninja', '--version']' returned non-zero exit status 1.
```
For some reason pytorch fails to run this simple code:
```
# torch/utils/cpp_extension.py
def is_ninja_available():
    r'''
    Returns ``True`` if the `ninja <https://ninja-build.org/>`_ build system is
    available on the system, ``False`` otherwise.
    '''
    with open(os.devnull, 'wb') as devnull:
        try:
            subprocess.check_call('ninja --version'.split(), stdout=devnull)
        except OSError:
            return False
        else:
            return True
```
I suspect that pip does something to `os.devnull` and that's why it fails.

This PR proposes simpler code that doesn't rely on anything but `subprocess.check_output`:
```
def is_ninja_available():
    r'''
    Returns ``True`` if the `ninja <https://ninja-build.org/>`_ build system is
    available on the system, ``False`` otherwise.
    '''
    try:
        subprocess.check_output('ninja --version'.split())
    except Exception:
        return False
    else:
        return True
```
It doesn't use `os.devnull` and performs the same function.
There could be a whole bunch of different exceptions there, I think, so I went for the generic one: we don't care why it failed, since this function's only purpose is to suggest whether ninja can be used or not.

Let's check:

```
python -c "import torch.utils.cpp_extension; print(torch.utils.cpp_extension.is_ninja_available())"
True
```

Look ma, no stdout noise to take care of (i.e. no need for `/dev/null`).

I was editing the installed environment-wide `cpp_extension.py` file directly, so I didn't need to tweak `PYTHONPATH`. I made sure to replace `'ninja --version'` with something that should fail, and I did get `False` for the above command line.

Next I did a somewhat elaborate cheat to re-package an already existing binary wheel with this corrected version of `cpp_extension.py`, rather than building from source:

```
mkdir /tmp/pytorch-local-channel
cd /tmp/pytorch-local-channel

# get the latest nightly wheel
wget https://download.pytorch.org/whl/nightly/cu110/torch-1.8.0.dev20201215%2Bcu110-cp38-cp38-linux_x86_64.whl

# unpack it
unzip torch-1.8.0.dev20201215+cu110-cp38-cp38-linux_x86_64.whl

# edit torch/utils/cpp_extension.py to fix the python code with the new version as in this PR
emacs torch/utils/cpp_extension.py &

# pack the files back
zip -r torch-1.8.0.dev20201215+cu110-cp38-cp38-linux_x86_64.whl caffe2 torch torch-1.8.0.dev20201215+cu110.dist-info
```

Now I tell pip to use my local channel, plus `--pre` so that it picks up the pre-release as an acceptable wheel:

```
# install using this local channel
git clone https://github.com/facebookresearch/fairscale/
cd fairscale
pip install -v --disable-pip-version-check -e . -f file:///tmp/pytorch-local-channel --pre
```

and voila, all works:

```
[...]
Successfully installed fairscale
```

I noticed a whole bunch of "ninja not found" errors in the log, which I think come from the same problem in other build-system packages that also use this old check (copied all over various projects and build tools), which the recent pip breaks:

```
writing manifest file '/tmp/pip-modern-metadata-_nsdesbq/fairscale.egg-info/SOURCES.txt'
Traceback (most recent call last):
  File "/home/stas/anaconda3/envs/main-38/bin/ninja", line 5, in <module>
    from ninja import ninja
ModuleNotFoundError: No module named 'ninja'
[...]
/tmp/pip-build-env-fqflyevr/overlay/lib/python3.8/site-packages/torch/utils/cpp_extension.py:364: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.
  warnings.warn(msg.format('we could not find ninja.'))
```

but these don't prevent the build from completing and installing. I suppose these need to be identified and reported to the various other projects, but that's another story.

The new pip does something to `os.devnull`, I think, which breaks any code relying on it. I haven't tried to figure out what happens to that stream object, but this PR, which removes its usage, solves the problem.

Also notice that:

```
git clone https://github.com/facebookresearch/fairscale/
cd fairscale
python setup.py bdist_wheel
pip install dist/fairscale-0.1.1-cp38-cp38-linux_x86_64.whl
```

works too. So it is really a pip issue.

Apologies if the notes are too many; I tried to give the complete picture, and other projects will probably need those details as well. Thank you for reading.

Pull Request resolved: pytorch#49443 Reviewed By: mruberry Differential Revision: D25592109 Pulled By: ezyang fbshipit-source-id: bfce4420c28b614ead48e9686f4153c6e0fbe8b7
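The generic `except Exception` in the proposed check matters because `subprocess` reports different failures with unrelated exception types. The minimal stdlib-only sketch below uses two hypothetical helpers, `command_succeeds` and `failure_type` (not part of `torch.utils.cpp_extension`), to show the two cases: a binary missing from `PATH` raises `FileNotFoundError` (a subclass of `OSError`), while a binary that runs but exits non-zero, as in the traceback above, raises `subprocess.CalledProcessError`, which is not an `OSError`. The made-up command name `no-such-build-tool-xyz` is assumed not to exist on the machine.

```python
import subprocess
import sys


def command_succeeds(cmd):
    """Return True if `cmd` runs and exits with status 0, False on any failure.

    Mirrors the broad `except Exception` of the proposed is_ninja_available():
    we only care whether the tool is usable, not why it failed.
    """
    try:
        subprocess.check_output(cmd)  # stdout is captured, so no /dev/null needed
    except Exception:
        return False
    return True


def failure_type(cmd):
    """Return the name of the exception subprocess raises for `cmd`, or None."""
    try:
        subprocess.check_output(cmd)
    except Exception as exc:
        return type(exc).__name__
    return None


if __name__ == "__main__":
    # A binary that is not on PATH raises FileNotFoundError (an OSError).
    print(failure_type(["no-such-build-tool-xyz"]))  # FileNotFoundError
    # A binary that exists but exits non-zero raises CalledProcessError,
    # which an `except OSError` clause does not catch.
    print(failure_type([sys.executable, "-c", "import sys; sys.exit(1)"]))  # CalledProcessError
    print(issubclass(subprocess.CalledProcessError, OSError))  # False
    print(command_succeeds([sys.executable, "--version"]))  # True
```

Catching `Exception` covers both cases with a single clause, at the cost of also hiding unexpected errors, which is acceptable for a yes/no availability probe.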

Commit dc052aa

[NNC] Add Support For is_nan (pytorch#48973)

Summary: Pull Request resolved: pytorch#48973 Test Plan: Imported from OSS Reviewed By: bertmaher Differential Revision: D25413166 Pulled By: eellison fbshipit-source-id: 0c79258345df18c60a862373fa16931228fb92ef

Elias Ellison authored and hwangdeyu committed Dec 23, 2020

Commit 6362b78

[NNC] add support for masked_fill (pytorch#48974)

Summary: Pull Request resolved: pytorch#48974 Test Plan: Imported from OSS Reviewed By: bertmaher Differential Revision: D25413165 Pulled By: eellison fbshipit-source-id: 8cece1dc3692389be90c0d77bd71b103254d5ad3

Elias Ellison authored and hwangdeyu committed Dec 23, 2020

Commit a0d6342

Add fusion support of aten::to (pytorch#48976)

Summary: Pull Request resolved: pytorch#48976 Test Plan: Imported from OSS Reviewed By: ZolotukhinM Differential Revision: D25413164 Pulled By: eellison fbshipit-source-id: 0c31787e8b5e1368b0cba6e23660799b652389cd

Elias Ellison authored and hwangdeyu committed Dec 23, 2020

Commit 08fd21f

eager quant: remove fake_quant after add/mul nodes during QAT (pytorch#49213)

Summary: Pull Request resolved: pytorch#49213

Changes behavior of Eager mode quantization to remove observation after add_scalar/mul_scalar. This is not used, and it removes one difference between Eager and FX modes.

Test Plan:

```
python test/test_quantization.py TestQuantizeFxOps.test_quantized_add_qat
python test/test_quantization.py TestQuantizeFxOps.test_quantized_mul_qat
python test/test_quantization.py TestQuantizationAwareTraining.test_add_scalar_uses_input_qparams
python test/test_quantization.py TestQuantizationAwareTraining.test_mul_scalar_uses_input_qparams
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25486276 fbshipit-source-id: 34a5d6ce0d08739319ec0f8b197cfc1309d71040

Commit 5ac65cb

fx quant: move {input|output}_quantized_idxs cfg from convert to prepare (pytorch#49238)

Summary: Pull Request resolved: pytorch#49238

Moves the `input_quantized_idxs` and `output_quantized_idxs` options from the convert config to the prepare config. This is done because these options are related to placing observers, which changes numerics during QAT.

The next PR will adjust the behavior of `input_quantized_idxs` in prepare in QAT to prevent placing a fake_quant at the input if the input is marked quantized. Placing a fake_quant there can lead to numerical inaccuracies during calibration, as it would start with scale=1 and zp=0, which may be different from the quantization parameters of the incoming quantized input.

Test Plan:

```
python test/test_quantization.py TestQuantizeFx
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25498762 fbshipit-source-id: 17ace8f803542155652b310e5539e1882ebaadc6

Commit 6c5a43d

fx quant: do not insert observers at quantized inputs (pytorch#49239)

Summary: Pull Request resolved: pytorch#49239

Context: the existing implementation of `input_quantized_idxs` is convert-only. Therefore, observers are inserted between the input and the first quantized node. This is a problem during QAT, because the initial input is a fake_quant, and it starts with scale=1 and zp=0. This does not match the quantization parameters of the graph input, which can lead to incorrect numerics.

Fix: do not insert an observer for a quantized input.

Test Plan:

```
python test/test_quantization.py TestQuantizeFx
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25499486 fbshipit-source-id: 303b49cc9d95a9fd06fef3b0859c08be34e19d8a
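The numerics concern described here (a fake_quant that starts at scale=1 and zp=0 disagreeing with the quantization parameters of an already-quantized input) can be illustrated with a minimal plain-Python sketch. `fake_quantize` is a hypothetical helper, not the `torch.quantization` API; it assumes per-tensor affine quantization to a signed 8-bit range with round-half-to-even, and the input's qparams (scale=0.25, zp=0) are made up for illustration.

```python
def fake_quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Affine fake quantization: snap x to the integer grid, then dequantize.

    Assumes a signed 8-bit range and Python's round-half-to-even rounding.
    """
    q = round(x / scale) + zero_point
    q = max(qmin, min(qmax, q))  # clamp to the representable integer range
    return (q - zero_point) * scale


# Values that lie exactly on the (assumed) grid of the incoming quantized input.
inputs = [0.25, 0.5, 0.75, -1.0]

# Re-quantizing with the input's own qparams leaves every value unchanged.
matched = [fake_quantize(v, scale=0.25, zero_point=0) for v in inputs]

# A fake_quant still at its initial scale=1, zp=0 snaps them to whole numbers.
initial = [fake_quantize(v, scale=1.0, zero_point=0) for v in inputs]

print(matched)  # [0.25, 0.5, 0.75, -1.0]
print(initial)  # [0.0, 0.0, 1.0, -1.0]
```

Skipping the observer for an input that is already quantized avoids this second, mismatched rounding step entirely.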

Commit f7a7355

fx quant: fix fq when input is quantized and node does not need fq (pytorch#49382)

Summary: Pull Request resolved: pytorch#49382

Fixes an edge case: if the input to the graph is quantized and the first node does not need activation observation, this makes sure that no observer is inserted.

Test Plan:

```
python test/test_quantization.py TestQuantizeFxOps.test_int8_input_no_unnecessary_fq
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25551041 fbshipit-source-id: a6cba235c63ca7f6856e4128af7c1dc7fa0085ea

Commit f604f1b

fx quant: make sure observer is inserted before a quantized output (pytorch#49420)

Summary: Pull Request resolved: pytorch#49420

Before: if an output was marked as quantized, it could actually end up unquantized if the previous node was not quantized.

After: if an output is marked as quantized, it will be quantized regardless of the quantization status of the previous node.

Test Plan:

```
python test/test_quantization.py TestQuantizeFxOps.test_quant_output_always_observed
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D25566834 fbshipit-source-id: 84755a1605fd3847edd03a7887ab9f635498c05c

Commit b7a36d0

add files to SLOW_TESTS for target determinator (pytorch#49500)

Summary:
- test_torch was split into 6 in pytorch#47356.
- test_linalg also has 10 slow-test markings.

Pull Request resolved: pytorch#49500 Reviewed By: ezyang, malfet Differential Revision: D25598085 Pulled By: walterddr fbshipit-source-id: 74b0b433897721db86c00e236d1dd925d7a6d3d0

Rong Rong (AI Infra) authored and hwangdeyu committed Dec 23, 2020

Commit 1aa640b

[reland] Support torch.distributed.irecv(src=None, ...) (pytorch#49383)

Summary: Pull Request resolved: pytorch#49383 Reland of pytorch#47137 ghstack-source-id: 118735407 Test Plan: waitforbuildbot Reviewed By: osalpekar Differential Revision: D25551910 fbshipit-source-id: 2e1f2f77e7c69204056dfe6ed178e8ad7650ab32

Commit 5aed6b3

Set caffe2::pthreadpool() size in ParallelOpenMP (pytorch#45566)

Summary: Addresses pytorch#45418. This is probably not the best solution, but it's a rebase of the solution we're considering until pytorch#45418 is solved. If you can outline a better one I'm willing to implement it (: Pull Request resolved: pytorch#45566 Reviewed By: ezyang Differential Revision: D24621568 Pulled By: glaringlee fbshipit-source-id: 89dad5c61d8b5c26984d401551a1fe29df1ead04

Commit 46971a5

Add torch._foreach_zero_ API (pytorch#47286)

Summary: **In this PR**
- add `_foreach_zero_` API
- Update all optimizers under /_multi_tensor/ to use `_foreach_zero_` in `zero_grad` method

Performance improvement, OP `zero_`:
- for-loop: 630.36 us
- foreach: 90.84 us

script

```
import torch
import torch.optim as optim
import torch.nn as nn
import torchvision
import torch.utils.benchmark as benchmark_utils

inputs = [torch.rand(3, 200, 200, device="cuda") for _ in range(100)]

def main():
    for op in [
        "zero_"
    ]:
        print("\n\n----------------- OP: ", op, " -----------------")
        stmt = "[torch.{op}(t) for t in inputs]"
        timer = benchmark_utils.Timer(
            stmt=stmt.format(op = op),
            globals=globals(),
            label="str(optimizer)",
        )
        print(f"autorange:\n{timer.blocked_autorange()}\n\n")

        stmt = "torch._foreach_{op}(inputs)"
        timer_mta = benchmark_utils.Timer(
            stmt=stmt.format(op = op),
            globals=globals(),
            label="str(optimizer_mta)",
        )
        print(f"autorange:\n{timer_mta.blocked_autorange()}\n\n")

if __name__ == "__main__":
    main()
```

**TODO**
- Refactor zero_grad once foreach APIs are stable.

**Tested** via unit tests

Pull Request resolved: pytorch#47286 Reviewed By: ngimel Differential Revision: D24706240 Pulled By: izdeby fbshipit-source-id: aac69d6d134d65126ae8e5916f3627b73d8a94bf

Iurii Zdebskyi authored and hwangdeyu committed Dec 23, 2020

Commit 6a59ef2

Bring back math_silu_backward which works for all backends. (pytorch#49439)

Summary: Pull Request resolved: pytorch#49439 Test Plan: Imported from OSS Reviewed By: nikithamalgifb, ngimel Differential Revision: D25594129 Pulled By: ailzhang fbshipit-source-id: 627bbea9ba478ee3a8edcc6695abab6431900192

Ailing Zhang authored and hwangdeyu committed Dec 23, 2020

Commit 3b1186d

[quant][be] Add typing for quantization_mappings.py (pytorch#49179)

Summary: Pull Request resolved: pytorch#49179 Test Plan: Imported from OSS Reviewed By: vkuzo, wat3rBro Differential Revision: D25470520 fbshipit-source-id: 16e35fec9a5f3339860bd2305ae8ffdd8e2dfaf7

Commit 99ba415

Add BFloat16 support for isinf and isfinite (pytorch#49356)